How will you interact with the Internet of Things in your smart home of the future? Perhaps by looking your connected air conditioning unit in the lens from the comfort of your sofa and fanning your face with your hand to tell it to crank up its cooling jets.

At least that’s the vision of Italian startup Cogisen, which is hoping to help drive a new generation of richer interface technology that combines different forms of interaction, such as voice commands and gestures, making each less error-prone and less abstract by adding “eye contact” into the mix. (If no less creepy… Look into the machine and the machine looks into you, right?)

The startup has built an image processing platform, called Sencogi, whose first focus is gaze tracking. The team sees plenty of potential beyond that, whether in helping to power vision systems for autonomous vehicles by detecting pedestrians, or in performing other specific object-tracking tasks for niche applications as industry needs demand.

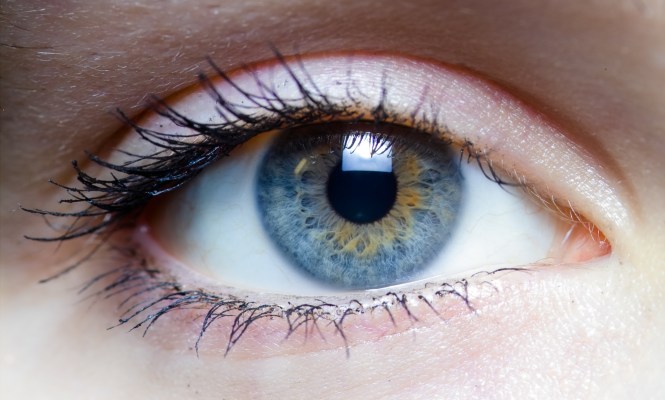

But it’s starting with tracking the minuscule movements of the human iris as the basis for a new generation of consumer technology interfaces. It remains to be seen, though, whether the consumers of the future will be won over to a world where they are expected to make eye contact with their gadgetry in order to control it, rather than comfort-mashing keys on a physical remote control.

“Voice control, voice recognition, works really, really well — it’s getting more and more robust… But interfaces certainly become a lot more natural if you start combining gaze tracking with voice control and gesture recognition,” argues Cogisen CEO and founder Christiaan Rijnders. “[Human interactions] are with the eyes and speech and gestures so that should be the future interaction that we have with our devices in the Internet of Things.”

“We are not saying gaze tracking will substitute other interfaces — absolutely not. But integrating them with all the different interfaces that we have will make interactions more natural,” he adds.

The startup has been developing its gaze-tracking algorithms since 2009, including three years of bootstrapping before pulling in VC funding. It has now secured bridge funding from the EU under the bloc’s Horizon 2020 SME Phase II Funding program, which aims to support startups that are still developing their tech and readying it for market.

Rijnders claims Cogisen’s gaze-tracking algorithms are proven at this point, after more than five years of R&D, although he concedes the technology itself is not yet proven, given the risk that tech users will perceive eye-tracking interfaces as gimmicky, i.e. “a solution looking for a problem” (if you’ll pardon the pun). That’s why its next step with the new EU financing is to build a robust case for why gaze tracking could be genuinely useful.

For the record, he dismisses an earlier-to-market consumer application of eye tracking in Samsung’s Galaxy S4 smartphone as “not eye tracking,” “not 100 percent robust” and “quite gimmicky.” It’s safe to say the feature did not prove a massive hit with smartphone users…

“There’s a legacy that we have to live with which is that things were — especially a few years ago — rushed onto the market,” he says. “So now you have to fight the perception that it has already been on smartphones… and people didn’t like it… So the market is now very careful before they bring out something else.”

Cogisen is getting €2 million under the EU program, which it will use to develop sample applications aimed at convincing industry otherwise, and ultimately at getting companies to buy in and license its algorithms down the line.

He also says, though, that it is push from industry that is driving eye-tracking R&D: “It’s industry coming to us asking if we have the solution.”

The greatest push for eye tracking is coming from Internet of Things device makers, according to Rijnders — which makes plenty of sense when you consider one problem with having lots and lots of connected devices ranged around you is how to control them all without it becoming far more irritating and time-consuming than just twiddling a few dumb switches and dials.

So IoT is one of the three verticals Cogisen will focus on for its proof of concept apps — the other two being automotive and smartphones.

He says Cogisen is positioning itself to address the general consumer segment, in contrast to other eye-tracking startups that he argues are more focused on building for B2B or on very specific use cases, including the creepiest gaze-tracking goal of all: advertising.

“The algorithms are proven. The technology itself and the applications still has to be proven. It has to be proven that you are willing to put your remote control in the bin and interact with your air conditioning unit combining voice control and gaze tracking,” he adds.

He names the likes of Tobii, Umoove, SMI and Eye Tribe as competitors, but says that, unlike these rivals, Cogisen does not rely on infrared or additional hardware for its eye-tracking tech. That means it can be applied to standard smartphone cameras, for example, without any need for especially high-res camera kit either.

He also says its eye-tracking algorithms can work at a greater range than infrared eye-trackers — currently “up to about three to four meters.”

Another advantage he cites over infrared-based technologies is that Cogisen’s tech requires no calibration, which he says offers a clear benefit for automotive applications, given that no one wants to calibrate their car before they can drive off.

Eye tracking (should it live up to its accuracy claims) also holds more obvious potential than face tracking, given the granular insights you can glean from knowing specifically where someone is looking, not just how they have oriented their face.

“Vehicles will never be 100 percent autonomous. There’ll be decreased degrees of autonomy. It’s easy for a car to decide to take away control from you, but it’s very hard for the car to decide when to give you back control. For that they need to understand your attention, so you need gaze tracking,” adds Rijnders, discussing one potential use case in the automotive domain.

When it comes to accuracy, he says that depends on the application in question and on training the algorithms to work robustly for that use case. To do that, Cogisen is combining its image processing tech with machine learning algorithms and a whole training “toolchain” that automates optimizations for each application, in order to deliver the claimed robustness.

So what exactly is the core tech here? What’s the secret image processing sauce? It’s down to using the frequency domain to identify complex shapes and patterns within an image, says Rijnders.

“What our core technology can do is recognize shapes and patterns and movements, purely in the frequency domain. The frequency domain is used a lot of course in image processing but it’s used as a filter — there’s nobody, until now, who has really been able to recognize complex shapes purely within the frequency domain data.

“And the frequency domain is inherently more robust, is inherently easy to use, is inherently faster to calculate with — and with this ability suddenly you have the ability to recognize much more complex patterns.”
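Cogisen has not published how Sencogi works, so the following is not its algorithm. For a flavor of what using frequency-domain data for more than filtering can look like, here is a minimal sketch of phase correlation, a long-established technique that estimates how far a pattern has moved between two images by comparing their Fourier coefficients. The function names (`dft2`, `phase_correlate`, `circular_shift`) and the tiny random 8x8 test image are hypothetical, chosen only for illustration; a naive pure-Python DFT keeps the sketch dependency-free, whereas real code would use an FFT library.

```python
import cmath
import random


def dft2(img, inverse=False):
    """Naive 2-D discrete Fourier transform.

    O(N^4) in the image side length, so only suitable for tiny demo images;
    real code would use an FFT library instead.
    """
    h, w = len(img), len(img[0])
    sign = 1 if inverse else -1
    out = []
    for u in range(h):
        row = []
        for v in range(w):
            s = 0j
            for y in range(h):
                for x in range(w):
                    angle = 2 * cmath.pi * (u * y / h + v * x / w)
                    s += img[y][x] * cmath.exp(sign * 1j * angle)
            # The inverse transform carries the 1/(h*w) normalization.
            row.append(s / (h * w) if inverse else s)
        out.append(row)
    return out


def phase_correlate(ref, moved, eps=1e-12):
    """Estimate the circular shift (dy, dx) that turns `ref` into `moved`."""
    h, w = len(ref), len(ref[0])
    A, B = dft2(ref), dft2(moved)
    # Normalized cross-power spectrum: keep only the phase difference,
    # which encodes the translation between the two images.
    R = []
    for u in range(h):
        row = []
        for v in range(w):
            cross = B[u][v] * A[u][v].conjugate()
            mag = abs(cross)
            row.append(cross / mag if mag > eps else 0j)
        R.append(row)
    # Its inverse transform is (ideally) a single spike at the shift.
    r = dft2(R, inverse=True)
    return max(((y, x) for y in range(h) for x in range(w)),
               key=lambda p: r[p[0]][p[1]].real)


def circular_shift(img, dy, dx):
    """Shift an image down by dy and right by dx, wrapping at the edges."""
    h, w = len(img), len(img[0])
    return [[img[(y - dy) % h][(x - dx) % w] for x in range(w)] for y in range(h)]


if __name__ == "__main__":
    rng = random.Random(0)
    ref = [[rng.random() for _ in range(8)] for _ in range(8)]
    print(phase_correlate(ref, circular_shift(ref, 3, 5)))  # (3, 5)
```

The final peak search happens after an inverse transform back to the spatial domain, and the method only recovers a whole-pixel translation of a known pattern, so this classic technique stops well short of the “purely in the frequency domain” recognition of complex shapes that Rijnders describes.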

Timeframe-wise, he reckons a commercial application of the gaze-tracking tech could be on the market in around two to three years. Cogisen’s demo apps (one in each vertical, chosen after market analysis) will be finished within a year, however, per the EU program requirements.

Rijnders notes the current stage of the tech development means it’s facing a classic startup problem of needing to show market traction before being able to take in another (bigger) tranche of VC funding — hence applying for the EU grant to bridge this gap. Prior to taking in the EU funding, it had raised €3 million in VC funding from three Italian investor funds (Vertis, Atlante and Quadrivio).

Rijnders’ background is in aerospace engineering. He previously worked for Ferrari developing simulators for Formula 1, which is where he says the germ of the idea to approach the hard problem of image processing from another angle occurred to him.

“There you have to do very non-linear, transient, dynamic multi-physics modeling, so very, very complex modeling, and I realized what the next generation of algorithms would need to be able to do for engineering. And at a certain point I realized that there was a need in image processing for such algorithms,” he says, of his time at Ferrari.

“If you think about the infinity of light conditions and different types of faces and points of view relative to the camera and camera quality for following sub-pixel movement of the irises — very, very difficult image processing problem to solve… We can basically detect signal signatures in image processing which are far more sparse and far more difficult than what has been possible up to now in the state of the art of image processing.”