As containers gain popularity for a broad variety of use cases, entrepreneurs and infrastructure software investors are focused on investing in the machinery around containers. But there is a particular notion that is emerging, which needs a name. Today I’m proposing that we start using the term container-native to refer to this notion.

I researched (googled) the term to learn how it was being used today. Turns out it is being used to refer to the idea of running containers on bare metal (rather than on VMs).

What a narrow use of a beautiful term! There should be a new definition for container-native that aims to better represent the magnitude of impact that containers will have on software development and operations.

con·tain·er na·tive

kənˈtānər/ ˈnādiv/

adjective

- software that treats the container as the first-class unit of infrastructure (as opposed to, for example, treating the physical machine or the virtual machine as the first-class unit)

- software that does not just “happen to work” in, on or around containers, but rather is purposefully designed for containers

The shift to container-native computing is a once-a-generation shift.

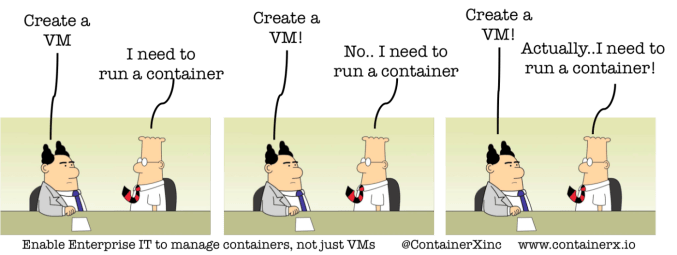

Just as in other once-a-generation shifts, legacy players rarely make the transition in a meaningful way. This happens for a few reasons: either (a) they don’t understand the magnitude or significance of the shift, or (b) they understand it but are stuck selling the wrong architecture and have incentives to treat aspects of the new architecture as check-box items in their messaging to the market, or (c) they are annoyed or disillusioned by the early overhype.

To illustrate what container-native can mean from a variety of angles, here are quick examples in (i) packaging, (ii) continuous integration and deployment, (iii) application lifecycle management (ALM), (iv) queueing and lambda architectures, (v) monitoring, and (vi) security.

Packaging

Joe Beda (formerly of Google, now an EIR at Accel, and advisor to Shippable and CoreOS) argues that the container community has focused heavily on environments to host containers (such as CoreOS and others), and tools to orchestrate containers (such as Docker Swarm, Kubernetes, Mesosphere and others), but not enough on tools to better understand what’s going on inside the container itself. He calls out the following specific problems:

- No package introspection. When the next security issue comes along it is difficult to easily see which images are vulnerable. Furthermore, it is hard to write automated policy to prevent those images from running.

- No easy sharing of packages. If [two] images install the same package, the bits for that package are downloaded twice. It isn’t uncommon for users to construct complicated “inheritance” chains to help work around this issue.

- No surgical package updating. Updating a package requires recreating an image and re-running all downstream actions in the Dockerfile. If users are good about tracking which sources go into which image, it should be possible to just update the package but that is difficult and error prone.

- Order dependent image builds. Order matters in a Dockerfile — even when it doesn’t have to. Often times two actions have zero interaction with each other. But Docker has no way of knowing that so must assume that every action depends on all preceding actions.

- Package manager cruft.
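
The order-dependence point in the list above can be illustrated with a toy model. This is a minimal sketch, not Docker's actual cache implementation: it assumes each build step's cache key is a hash of its parent's key plus its own instruction text, and the function names `layer_keys` and `rebuilt_steps` are invented for this example.

```python
import hashlib


def layer_keys(instructions):
    """Return one cache key per instruction; each key chains in its parent's key."""
    keys = []
    parent = ""
    for instruction in instructions:
        # Assumption: the key depends on everything that came before this step.
        parent = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(parent)
    return keys


def rebuilt_steps(old, new):
    """Indices of steps in `new` whose key differs from the same position in `old`."""
    old_keys = layer_keys(old)
    new_keys = layer_keys(new)
    return [
        i for i, key in enumerate(new_keys)
        if i >= len(old_keys) or key != old_keys[i]
    ]


before = ["FROM debian", "RUN apt-get install -y curl", "RUN apt-get install -y git"]
after = ["FROM debian", "RUN apt-get install -y wget", "RUN apt-get install -y git"]

# Step 2 is textually unchanged, yet it is rebuilt because its parent changed.
print(rebuilt_steps(before, after))  # [1, 2]
```

In this model the `git` step has no real interaction with the `curl`/`wget` step, but the chained key forces it to be redone anyway, which is the behavior the bullet describes.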

He goes on to suggest several partial solutions, but the root cause is that our packaging infrastructure is not container-native.
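
To make the package introspection problem concrete, here is a rough sketch of what a container-native tool could offer if every image carried a queryable package manifest. Everything in it is hypothetical: the `Image` type, the image names, the package `libexample`, and the version numbers are invented for illustration and do not describe any existing tool or real advisory.

```python
from dataclasses import dataclass, field


@dataclass
class Image:
    """Hypothetical image record with an inspectable package manifest."""
    name: str
    packages: dict = field(default_factory=dict)  # package name -> installed version


def vulnerable_images(images, package, bad_versions):
    """Names of images that ship `package` at one of the known-bad versions."""
    return [img.name for img in images if img.packages.get(package) in bad_versions]


def allowed_to_run(image, advisories):
    """Automated policy: refuse any image containing an advised-against version."""
    return all(
        image.packages.get(pkg) not in bad
        for pkg, bad in advisories.items()
    )


# Invented sample data.
images = [
    Image("web", {"libexample": "1.0.1"}),
    Image("worker", {"libexample": "1.0.2"}),
    Image("cache", {"otherlib": "2.3"}),
]
advisories = {"libexample": {"1.0.1"}}

print(vulnerable_images(images, "libexample", {"1.0.1"}))   # ['web']
print([img.name for img in images if allowed_to_run(img, advisories)])  # ['worker', 'cache']
```

With a manifest like this, both questions from the list become one-line queries: which images are affected, and which images a policy should keep from running.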

DevOps: Continuous Integration (CI), Continuous Deployment (CD), Application Lifecycle Management (ALM)

Let’s focus on container-native approaches within CI, CD, and ALM. Approaches such as immutable containers change how you think about your systems with containers:

Immutable Servers are a deployment model that mandates that no application updates, security patches, or configuration changes happen on production systems. If any of these layers needs to be modified, a new image is constructed, pushed and cycled into production. The advantages of this approach include higher confidence in the code that is running in production, integration of testing into deployment workflows, and verifiability that systems have not been compromised.
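
The model described above can be sketched in a few lines. This is only an illustration under stated assumptions: `ImageSpec`, its fields, and `roll_out` are hypothetical names, and a short hash stands in for a real image digest.

```python
import hashlib
import json
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ImageSpec:
    """Hypothetical description of everything baked into an image."""
    app_version: str
    base_patch_level: str
    config: tuple  # (key, value) pairs, fixed at build time

    def digest(self):
        # Any change to any layer yields a different digest.
        payload = json.dumps([self.app_version, self.base_patch_level, self.config])
        return hashlib.sha256(payload.encode()).hexdigest()[:12]


def roll_out(production, new_spec):
    """Cycle every running instance to the new image instead of patching in place."""
    return [new_spec.digest() for _ in production]


v1 = ImageSpec("1.0", "patch-1", (("LOG_LEVEL", "info"),))
production = [v1.digest()] * 3

# A security patch is not applied to running systems; it produces a new image.
v2 = replace(v1, base_patch_level="patch-2")
production = roll_out(production, v2)

print(v1.digest() != v2.digest())        # True
print(set(production) == {v2.digest()})  # True
```

Because the spec is frozen, an attempt to edit `v1` in place raises an error, and comparing the digests running in production against the expected digest gives a simple check that nothing has drifted.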

Once you become a believer in the concept of immutable servers, then speed of deployment and minimizing vulnerability surface area become objectives. Containers promote the idea of single-service-per-container (microservices), and unikernels take this idea even further.

Companies such as Nirmata and Mesosphere (with Velocity) have been pushing similar messages. Notice that containers are changing the best practices for DevOps, which reflects the need for container-native rather than general-purpose tools:

“[Containers] require new tooling for automation and management of applications.”

Workflow Processing and Orchestration

Containers have also enabled the increasing prominence of serverless computing & microservices architectures. Matt Miller of Sequoia recently wrote that containers have “created a standardized frame for all services” and have become a core building block of microservices architectures.

The ability to decouple components into discrete functions and services is fundamentally changing how architects are thinking about app and datacenter composition, and hence, job and task processing.

As always happens with a platform shift, a few prescient companies have already embraced the container-native approach to job and workflow processing. A notable example is Amazon Web Services’ Lambda framework for serverless computing.

Companies such as Iron.io (disclosure: Bain Capital is an investor) have likewise embraced this vision and leverage container-native workflow processing with Go and micro-containers to provide enterprise solutions for reliably scaling container-centric jobs on any cloud or on-premises.

Security & Monitoring

Unsurprisingly, as containers get put into production, enterprises want the same assurances around container-based technologies that they’ve had for their legacy stacks. Security and monitoring are both key areas where older products fall short. Container-specific approaches are necessary.

Leading the charge in monitoring is Sysdig (disclosure: Bain Capital is an investor, and last week we announced a $15M Series B financing that we co-led with Accel Partners), which recently announced partnerships with Kubernetes and Mesosphere to enable more consistent application deployment.

Monitoring is a competitive market, with plenty of existing open source frameworks (Zabbix, Zenoss) and well-funded commercial vendors (New Relic, AppDynamics). The popularity of Sysdig’s open source container troubleshooting solution, and the demand for their commercial monitoring product, show the need for a container-native product to fill the gaps left by other vendors.

In security, Sysdig’s container-native approach allows it to see every single system call in every process in every container; other noteworthy container-native approaches include CoreOS, with their rkt framework. The message that traditional security approaches do not address the challenges posed by containers is resonating:

The very features of containers that make them so cost-effective and easy to use, also bring about a new set of security challenges. Containers are vulnerable to cyber attacks in three main ways: 1. Containers operate on a shared kernel, making them scalable, but also making it easier for hackers to infect all other containers running on the same host; 2. The users of virtual containers have very few controls to limit or monitor their software use, making them prime insider threats; 3. Because containers are self-contained, they often include outdated, vulnerable and non-compliant components, thus putting the entire system at risk. — Scalock.

You might argue that being container-native may not be sufficiently differentiating to produce a lasting business, and that container-native may become a subset of functionality offered by legacy vendors. Certainly, many large companies will be able to develop products that are “good enough” to cope with many container use cases. My gut, though, is that it’s a paradigm shift.