The machine overlords of the future may now, if it pleases them, eliminate all black and white imagery from the history of their meat-based former masters. All they’ll need is this system from Berkeley computer scientist Richard Zhang, which allows a soulless silicon sentience to “hallucinate” colors into any monochrome image.

It uses what’s called a convolutional neural network (several, actually), a type of computer vision system that mimics low-level visual systems in our own brains in order to perceive patterns and categorize objects. Google’s DeepDream is probably the best-known example of one. Trained by examining millions of images of, well, just about everything, Zhang’s system of CNNs recognizes things in black and white photos and colors them the way it thinks they ought to be.

Grass, for instance, has certain features — textures, common locations in images, certain other things often found on or near it. And grass is usually green, right? So when the network thinks it recognizes grass, it colors that region green. The same thing occurs for recognizing certain types of butterflies, building materials, flowers, the nose of a certain breed of dog and so on.
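
For the code-minded, here is a toy sketch of that "recognize it, then color it" idea. It is not Zhang's network: the label grid is simply handed in rather than predicted, and the `TYPICAL_CHROMA` table, its numbers and the `colorize` function are all made up for illustration. The one real point it shows is that the brightness of each pixel comes from the original photo, while only the color component is guessed.

```python
# Hypothetical "typical" color guesses per recognized thing, written as
# (a, b) chroma pairs in a Lab-like space: a runs green to red, b runs blue to yellow.
# These numbers are illustrative assumptions, not values from the paper.
TYPICAL_CHROMA = {
    "grass": (-40.0, 45.0),  # greenish
    "sky": (-5.0, -35.0),    # bluish
}

GRAY = (0.0, 0.0)  # no chroma: the pixel stays gray


def colorize(lightness, labels):
    """Pair each pixel's original lightness with a chroma guess for its label.

    lightness: rows of floats taken from the black and white image
    labels:    rows of strings, the same shape, naming what was "recognized"
    Returns rows of (L, a, b) tuples.
    """
    return [
        [(l, *TYPICAL_CHROMA.get(name, GRAY)) for l, name in zip(l_row, n_row)]
        for l_row, n_row in zip(lightness, labels)
    ]


# A 2x2 "photo": sky on top, grass and something unrecognized below.
lightness = [[80.0, 80.0], [50.0, 50.0]]
labels = [["sky", "sky"], ["grass", "mystery"]]

for row in colorize(lightness, labels):
    print(row)
```

Anything the lookup doesn't know stays gray, which is a fair cartoon of what happens when a colorizer fails to recognize a region.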

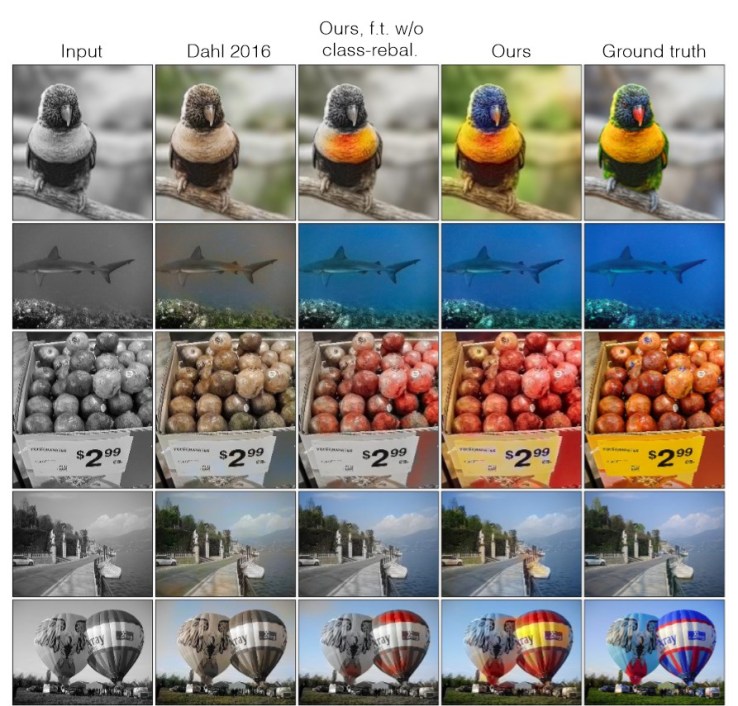

Examples of Zhang’s colorizing process compared with other systems and the original color versions (right).

In the paper describing the system, Zhang calls this recognition and color assignment process “hallucination,” and really, the term is apt: The system is seeing things that aren’t actually there. It’s quite similar to the way we humans would colorize something: We compare the shapes and patterns to what we’ve seen before and pick the most appropriate crayon (or hex value).

The results are naturally a mixed bag (as the results of AI systems frequently are), and the idea of colorizing Ansel Adams’ photos is repellent to me (they look like Thomas Kinkades; Henri Cartier-Bresson likewise fares poorly), but really, it’s hard to call them anything but a success. Zhang and his colleagues tested the system’s efficacy by asking people to choose between two color versions of a monochrome image: the original and the fruit of the neural network’s labor. People chose the network’s version 20 percent of the time. That doesn’t sound like much (a colorization indistinguishable from the real thing would be picked about half the time), but it is in fact better than previous colorization efforts.
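
If you want to see how a number like that gets tallied, here is a minimal sketch. The trial data is invented purely to land on 20 percent, and `fooling_rate` and `CHANCE_RATE` are names of my own, not anything from the paper. It assumes each trial boils down to one yes-or-no: did the person pick the generated image?

```python
# In a two-way choice, a colorization nobody can tell from the original
# would be picked about half the time, so 50% is the practical ceiling.
CHANCE_RATE = 0.5


def fooling_rate(chose_generated):
    """Fraction of trials in which the generated image was picked."""
    if not chose_generated:
        raise ValueError("need at least one trial")
    return sum(chose_generated) / len(chose_generated)


# Made-up outcomes, chosen only to reproduce the article's 20 percent.
trials = [True] * 20 + [False] * 80

rate = fooling_rate(trials)
print(f"fooled {rate:.0%} of the time; chance ceiling is {CHANCE_RATE:.0%}")
```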

The paper has plenty of technical info, but also lots of interesting examples of how and when the system failed, when it was most and least convincing, and all that. Check it out (and some of the other papers it references) for fresh conversation fodder to share with your computer vision expert friends this weekend.