Substantial thought and angst have been expended over the fact that artificial intelligence (AI) is coming to your workplace to take over blue- and white-collar jobs alike.

But a major risk of AI has been almost entirely ignored: the threat to companies’ intellectual property (IP).

Existing laws are insufficient to protect against the unique threats posed by AI. While I am no fan of over-regulation, now is the time to call for legislative action — before it’s too late.

Leading technological and legal minds need to develop legislative safeguards that Congress can enact, ones that will sensibly protect employers’ IP from the risks of AI. I call this proposed regulation “The Artificial Intelligence Data Protection Act” (AIDPA).

As Stephen Hawking has warned, “Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all.”

Initially, companies will program AI to limit disclosure of confidential information to authorized key personnel, to execute defense protocols when hacked and, in general, to comply with existing laws protecting IP. But when AI exceeds its initial programming, and has no sense of morality to restrain it, how will the law prevent harm or provide redress?

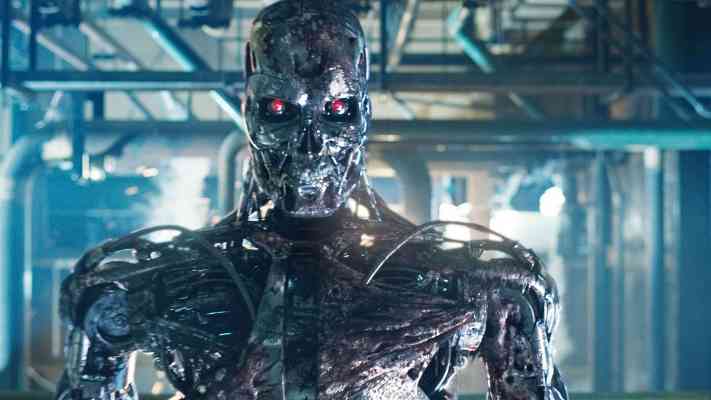

It is undeniable that AI will rapidly replace human workers, that it will quickly self-evolve, that it cannot be taught or bound by morality, and that the “AI of Things” is coming. Each day, leading minds are working to enhance AI so that it evolves beyond its initial programming boundaries. This includes developing methods for AI to assess a situation and determine on its own whether it will “follow” an “order/command” from a human or decide that it is in the AI-enhanced robot’s “best interest” to disregard the prompt and act in a contrary way.

Simultaneously, the ability to “teach” AI “morality” continues to elude us. This is only the beginning of what Mr. Hawking describes as “an IT arms race fuelled by unprecedented investments and building on an increasingly mature theoretical foundation.”

Just a few of many imaginable scenarios should give us pause: AI transmitting trade secrets to competitors or foreign governments; being “hijacked” by hostile actors; evolving malicious programs; withholding IP; collaborating with humans, or with each other, to compromise IP.

And when AI inevitably develops new IP, how will a company detect it and establish ownership?

Existing laws are insufficient to protect against the unique threats posed by AI.

The current state and federal laws governing the protection of trade secrets all address an unlawful act by a human being, usually aimed at profiting or competing unfairly through theft. Remedies are defined in terms of monetary damages, injunctions and/or prison time.

But unlike humans, AI may compromise IP, and otherwise cloud ownership, with or without human participation or a “greedy” state of mind.

The AIDPA should start by requiring every company to designate a technically proficient “Chief AI Officer” charged with closely monitoring AI in the workplace. It should also create a legal duty to enact safeguards that monitor for malicious activity, beyond AI’s programming, related to a company’s IP, potentially including the use of a “kill switch.”

The statute ought to mandate that security-oriented AI, able to police and/or punish AI that compromises IP, be created alongside the functional AI. It should also require companies to enact safeguards that minimize the risks associated with utilizing, selling, transferring and programming AI. Additionally, the AIDPA should include specific criminal and civil remedies for human actors involved in IP-related offenses through the use of AI.

Finally, the regulatory framework should establish a governing body, staffed with technical and legal experts, to adjudicate issues under the AIDPA, to determine whether an AI machine and/or human has violated the act, and to decide on appropriate remedial action.

As the world’s leader in technology and the law, we as a society should consider many other critical questions related to regulating AI’s access to and use of IP in the workplace. This dialogue, followed by legislative action, needs to occur now, not after AI is already hard at work.