The experience of “reality” is a result of our sensory organs delivering information about the external world to our brains via electrical stimulus. The brain interprets this input and creates our reality.

The ultimate goal of virtual reality (VR) is to replace the external world as the source of these stimuli, creating true immersion and a sense of “presence” that makes the virtual world seem just as real as the physical one. However, achieving immersion and presence means overcoming many inherent technical obstacles, which in turn requires inventing new technologies and applying them to gaming, entertainment and multimedia.

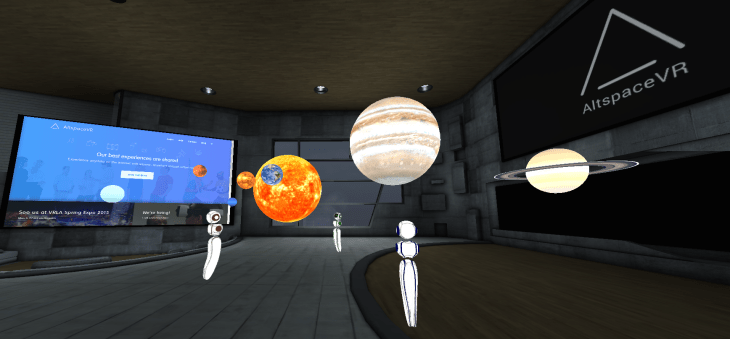

Tremendous advances in graphics technology are making it possible to achieve true immersion with hyper-realistic VR simulations. The combination of powerful graphics and computer processing, high-resolution head-mounted displays (HMDs), motion-sensing technologies and high-fidelity surround audio is enabling users to interact with, manipulate and explore simulated environments within virtual worlds.

The capabilities of today’s VR technology are very good — but they still have not reached their full potential. Researchers working with computer hardware and software development, game design, human optics and neuroscience are striving to further the VR experience. Their goal is to dissolve the last barriers to achieving true immersion, and make a VR experience indistinguishable from reality. As imaginative author Terence McKenna once said, “This is what virtual reality holds out to us — the possibility of walking into the constructs of the imagination.”

Changing Our Views, And The Metrics

Supplanting the familiar, often ill-fitting eyewear that current 3D displays and projections demand, VR technology requires a head-mounted display that matches head and gesture movements with near-real-time point-of-view changes within a VR simulation. To achieve true realism within a virtual environment, though, the resolutions, pixel densities and latencies of current-generation displays are insufficient.

To be convincing and effective, VR has to work intimately with the human eye and visual system. Instead of the familiar “pixels-per-inch” (ppi) specifications of 2D displays, VR displays are specified by their “field-of-view” (FOV), measured in degrees, and by their angular resolution, measured in “pixels-per-degree” (ppd). Placing FOV and ppd into human perspective, our eyes’ stereoscopic FOV can be more than 120° wide and 135° high, with a resolution of around 60 ppd.

Depending on where an object lies in our visual periphery, our sight of it may be less sensitive to fine detail (or high resolution), but more aware of latency and rapid changes. Research into VR must account for both the need for highly precise rendering in particular regions of the visual field and the need for low latency in generating the entire view.

What does this all mean? An immersive display that delivers the human eye’s expected resolution of 60 ppd across that field of view needs an incredible 7.2K of horizontal and 8.1K of vertical pixels per eye (120° × 60 ppd and 135° × 60 ppd). That is roughly 58.3 million pixels (megapixels) per eye, or about 116.6 megapixels in total, or 16K resolution! Current displays, such as the latest home entertainment systems and VR technology, are capable of up to “only” 4K resolutions. As VR display research advances, though, 16K per-eye resolutions will likely be achievable within a few years.
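The arithmetic behind these figures can be reproduced with a few lines of Python. This is only a rough sketch: it assumes a uniform angular resolution across the whole field of view, which is a simplification, and the function name `pixels_for_fov` is made up for illustration.

```python
def pixels_for_fov(fov_h_deg, fov_v_deg, ppd):
    """Return (horizontal, vertical, total) pixel counts for one eye.

    Assumes the same pixels-per-degree value everywhere in the field of
    view, which is a simplification of how the eye actually resolves detail.
    """
    horizontal = round(fov_h_deg * ppd)
    vertical = round(fov_v_deg * ppd)
    return horizontal, vertical, horizontal * vertical


# Figures quoted in the article: 120 x 135 degrees at about 60 ppd.
h, v, per_eye = pixels_for_fov(120, 135, 60)
both_eyes = 2 * per_eye

print(f"{h} x {v} per eye = {per_eye / 1e6:.2f} MP")
print(f"both eyes = {both_eyes / 1e6:.2f} MP")
```

Changing the `ppd` argument shows how quickly the pixel count grows: because both dimensions scale with it, doubling the angular resolution quadruples the number of pixels to render.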

But what about latency? After all, low latency is absolutely essential for true immersion, and arguably is the most important performance metric for VR.

Latency: The Achilles’ Heel Of VR

In addition to graphically rendering fully immersive and realistic worlds, VR technology has to contend with obtaining sensory input from HMDs or headsets worn by users experiencing VR. The user-perceived time between taking an action in the virtual world — typically through making a gesture or motion in the physical world — and the resulting change in the VR simulation is known as “motion-to-photon” latency.

If this latency is not kept to a minimum, the motions and orientation of the user will become decoupled from those of the virtual experience, leading to visual frame-dropping “judder” and potentially inducing the disorientation and nausea of simulation sickness. Avoiding all this requires that motion-to-photon latency stay below a fixed threshold, and a total latency of 20 milliseconds (ms) is widely considered the “line in the sand” benchmark that is currently propelling VR development.
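To make the 20 ms figure more concrete, the sketch below adds up a latency budget in Python. The stage names and their timings are hypothetical values chosen purely for illustration, not measurements of any real headset. Only the 20 ms threshold comes from the text above.

```python
BUDGET_MS = 20.0  # motion-to-photon benchmark cited in the article


def frame_time_ms(refresh_hz):
    """Time available to render one frame at a given display refresh rate."""
    return 1000.0 / refresh_hz


def motion_to_photon_ms(stages):
    """Sum the per-stage latencies (in ms) along the motion-to-photon path."""
    return sum(stages.values())


# Hypothetical pipeline: every number here is an assumption for illustration.
stages = {
    "sensor_sampling": 1.0,
    "tracking_fusion": 2.0,
    "render": frame_time_ms(90),  # one full frame at an assumed 90 Hz
    "scanout_display": 5.0,
}

total = motion_to_photon_ms(stages)
within_budget = total <= BUDGET_MS

print(f"total = {total:.2f} ms, within {BUDGET_MS:.0f} ms budget: {within_budget}")
```

Under these assumed numbers, rendering alone takes more than half of the budget, which is why higher resolutions that lengthen render time put direct pressure on latency.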

As the drive for photorealism in VR pushes resolution requirements ever higher, and with them the demands on graphics-rendering hardware and software, the motion-to-photon latency must remain consistently low.

A Brave New VR World

Virtual reality profoundly redefines how we interact with computers — from the initial input through to the final display output. VR developers must be able to simultaneously increase resolution, decrease latency and incorporate intuitive inputs, such as gesture and voice recognition, to create an engrossing and immersive experience. Today’s rendering methods will not suffice, and new techniques and hardware capabilities are needed to enhance the VR experience for the user.

Developers require a VR platform that provides software functionality to simplify and optimize VR development and unlocks many unique hardware features designed to seamlessly interplay with headsets to enable smooth and immersive VR experiences.

Armed with the right tools, VR developers can help pave the path to incredible virtual journeys in which the brain believes the virtual world is indeed real.