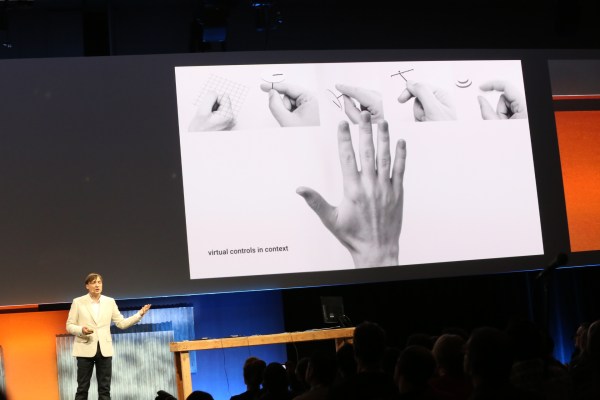

Google ATAP’s Project Soli is an attempt to harness the power of hand and finger manipulation to make it easier to interact with ever-smaller devices and screens. It posits that instead of using tools, we should use our “hand motion vocabulary” to control devices, even when the devices aren’t present.

Soli lets you control devices using natural hand motions, detecting very fine movements accurately and precisely, even through materials (you could install the sensor beneath a table, for instance). It does this using radar (more on that later), and because there are no touch targets that need to be sized for a fingertip, it lets you manipulate tiny or huge displays with equal accuracy.

Haptic feedback comes built in, since your hand provides it naturally: your own skin offers friction when you touch fingertip to fingertip. Soli, then, is designed to reimagine your hand as its own user interface. This requires a sensor to detect motion, of course, but should have no other hardware requirements.


Soli is designed to work in tiny devices, like smartwatches, and to work through surfaces and at a distance. ATAP found that radar was the right sensor for the job, meeting all of its requirements except size, so the team set about making it smaller. Through an iterative hardware design process, they shrank it from roughly the size of a home gaming console to something smaller than a quarter.

ATAP also achieved scale production, all within 10 months of development time. The team also worked out how to track minute changes in the received signal to determine the position of individual fingers. The system responds to very slight motion because it transforms the signal into a number of different visualizations, which together yield a subtle, multidimensional picture of exactly what your hand is doing.
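To make “tracking minute changes in the received signal” more concrete, here is a minimal Python sketch of the textbook relation radar systems use to turn phase shifts into motion: a target that moves a distance d changes the round-trip phase of its echo by 4πd/λ. This is not ATAP’s pipeline. The 60 GHz carrier, the simulated 0.1 mm-per-frame fingertip motion, and the helper names (`wavelength_m`, `displacement_from_phase`, `unwrap`) are assumptions chosen for illustration only.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_m(carrier_hz: float) -> float:
    """Free-space wavelength (metres) of a radar carrier at carrier_hz."""
    return SPEED_OF_LIGHT_M_S / carrier_hz


def displacement_from_phase(delta_phase_rad: float, carrier_hz: float) -> float:
    """Radial displacement (metres) implied by a change in received phase.

    The echo travels out and back, so moving the target by d changes the
    round-trip path by 2d and the phase by 4*pi*d / wavelength.
    """
    return wavelength_m(carrier_hz) * delta_phase_rad / (4 * math.pi)


def unwrap(phases: list[float]) -> list[float]:
    """Remove 2*pi jumps so successive phase samples form a continuous curve."""
    out = [phases[0]]
    for prev, cur in zip(phases, phases[1:]):
        # Wrap each frame-to-frame step into [-pi, pi) before accumulating.
        step = (cur - prev + math.pi) % (2 * math.pi) - math.pi
        out.append(out[-1] + step)
    return out


if __name__ == "__main__":
    CARRIER_HZ = 60e9   # assumed 60 GHz carrier (illustrative, not from the article)
    STEP_M = 0.1e-3     # hypothetical fingertip motion: 0.1 mm per frame
    FRAMES = 40

    lam = wavelength_m(CARRIER_HZ)
    # Simulated received phase, wrapped into (-pi, pi] as a receiver would report it.
    raw = []
    for i in range(FRAMES):
        phi = 4 * math.pi * (i * STEP_M) / lam
        raw.append(math.atan2(math.sin(phi), math.cos(phi)))

    smooth = unwrap(raw)
    moved = displacement_from_phase(smooth[-1] - smooth[0], CARRIER_HZ)
    print(f"wavelength: {lam * 1e3:.3f} mm")
    print(f"recovered displacement: {moved * 1e3:.3f} mm")
```

Run as a script, it prints a wavelength of about 4.997 mm and a recovered displacement of 3.900 mm (39 steps of 0.1 mm). Because one full 2π phase cycle corresponds to only half a wavelength of motion, about 2.5 mm at this assumed frequency, even sub-millimetre finger movements produce large, easily measured phase changes. That is one reason short-wavelength radar suits fine gesture sensing.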

An API will give developers access to all of the translated signal information, letting them work with whichever stage of the interpreted data they need.

ATAP is targeting broad availability for Soli later this year, in a form that’s usable in wearable devices like smartwatches. This could seriously improve the chops of Android Wear hardware, so here’s hoping it arrives in time for a big flagship device launch this holiday season.