When most people think of stock photography, they picture poorly framed pictures with generic-looking subjects doing everyday activities with an unnatural amount of enthusiasm.

With its collection on Getty Images, photo-sharing startup EyeEm has blurred the line between professional photography and the casual shooting we all do now that most smartphones have decent cameras and myriad ways to share photos.

For those photos to actually appeal to people looking to spend money on stock imagery, EyeEm had to bring in talented artists. That’s why it stages internationally touring art shows showcasing the best work on the service and partners with Foursquare and Huffington Post: to position itself as the best mobile platform for getting professional exposure.

Now that its archives are populated with so many images, the startup is building up its back end to better surface the best of the bunch, with help from talent acquired with the purchase of Sight.io back in August. In a meeting last week, CTO Ramzi Rizk showed me how the company is developing its machine-learning algorithms to better identify what’s in a photo without requiring users to tag their uploads with every single object shown.

If you use the search function, you can see the early results of these efforts. A search for “flowers on black background” surfaces photos of exactly that, even though fewer than half of them (based on my testing of several similar searches) actually carry the relevant tags that would otherwise bring them up. These algorithms work using the same machine-learning techniques that Google used to teach its systems what a cat is in YouTube videos, so by themselves they’re impressive but not necessarily all that new.

Now that the company has the layer of machine learning up and running (and learning new concepts every day), EyeEm is “training” its algorithms to identify which photos actually look good. By looking at things like which objects are in focus and which are blurred, what’s located in each third of a photo, and other identifiers of “beauty,” the ranking algorithms determine an EyeRank of aesthetic quality for each photo and apply an aggregated score to each photographer.
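
To make that roll-up concrete, here is a minimal Python sketch of how per-photo scores might be aggregated into a per-photographer score. Everything in it is an assumption for illustration: the feature names (`sharpness`, `thirds_alignment`), the equal weights, and the simple averaging are hypothetical and are not EyeEm’s actual EyeRank.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical weights; the real signals and how they are combined are not public.
WEIGHTS = {"sharpness": 0.5, "thirds_alignment": 0.5}

def photo_score(features):
    """Weighted sum of feature values, each assumed to lie in [0, 1]."""
    return sum(WEIGHTS[name] * features[name] for name in WEIGHTS)

def photographer_scores(photos):
    """Average the photo scores per photographer (an assumed aggregation)."""
    by_author = defaultdict(list)
    for photo in photos:
        by_author[photo["author"]].append(photo_score(photo["features"]))
    return {author: mean(scores) for author, scores in by_author.items()}
```

In a sketch like this, a photographer with one sharp, well-composed shot and one mediocre shot lands in between the two, which is roughly what an “aggregated score” implies.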

The scoring system isn’t perfect. It still partially relies on user engagement, which leads to some weird outcomes — for instance, I don’t know whether it was machine learning or user input that caused searches for “woman” to bring up giant photos of a woman in a pose kind of like Nicki Minaj on the cover of Anaconda near the top of results.

But in the long run, these efforts are just another way for EyeEm to accomplish several of its goals. In theory, the ranking further ensures that photographers see the service as a good place to get discovered: if they shoot good work, they can assume the back end will do a lot of the heavy lifting needed to get attention.

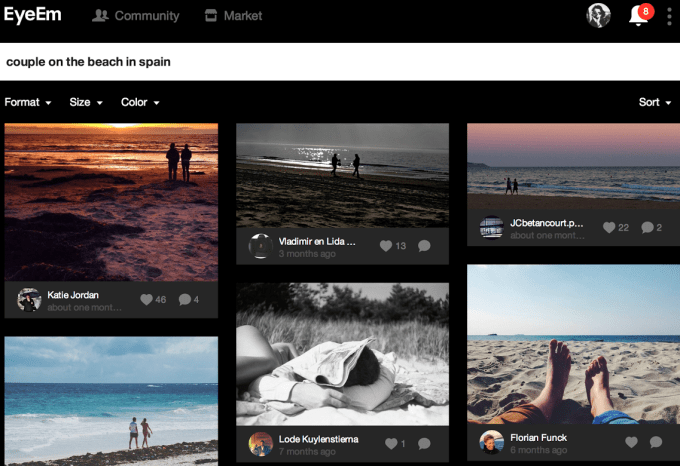

Even further out, though, it helps EyeEm shift from being a service that pushes photos out to sites like the Huffington Post and Getty Images to being a well-trafficked “stock photography” marketplace in its own right. The EyeEm Market, which is still in beta, won’t just show you which photos match the key phrases you use to search. From the millions of photos in EyeEm’s archives, it’ll show you the ones actually worth buying.