We don’t use the “real” Facebook. Or Twitter. Or Google, Yahoo, or LinkedIn. We are almost all part of experiments they quietly run to see if different versions with little changes make us use more, visit more, click more, or buy more. By signing up for these services, we technically give consent to be treated like guinea pigs.

But this weekend, Facebook stirred up controversy because one of its data science researchers published the results of an experiment on 689,003 users to see if showing them more positive or negative sentiment posts in the News Feed would affect their happiness levels as deduced by what they posted. The impact of this experiment on manipulating emotions was tiny, but it raises the question of where to draw the line on what’s ethical with A/B testing.

First, let’s look at the facts and big issues:

The Experiment Had Almost No Effect

Check out the study itself or read Sebastian Deterding’s analysis for a great breakdown of the facts and reactions.

Essentially, three researchers, including Adam Kramer, one of Facebook’s core data scientists, set out to test whether emotions are contagious via online social networks. For a week, Facebook showed people fewer positive or fewer negative posts in News Feed, and then measured how many positive or negative words they included in their own posts. People shown fewer positive posts (a more depressing feed) posted 0.1% fewer positive words (their status updates were a tiny bit less happy). People shown fewer negative posts (a happier feed) posted 0.07% fewer negative words (their updates were a tiny bit less depressed).

Here you can see that the experiments only reduced the usage of positive or negative words a tiny amount.

News coverage has trumpeted that the study was harmful, but it only made people ‘sad’ by a minuscule amount.

Plus, that effect could be attributed not to an actual emotional change in the experiment participants, but to them simply following the trends they see on Facebook. Success theater may just be self-perpetuating, as seeing fewer negative posts might cause you to manicure your own sharing so your life seems perfect too. One thing the study didn’t find was that being exposed to happy posts on Facebook makes you sad because your life isn’t as fun, but again, the findings measured what people posted, not necessarily how they felt.

Facebook Didn’t Get Consent Or Ethics Board Approval

Facebook only did an internal review to decide if the study was ethical. A source tells Forbes’ Kashmir Hill it was not submitted for pre-approval by an Institutional Review Board, an independent ethics committee that requires scientific experiments to meet stern safety and consent standards to ensure the welfare of their subjects. I was IRB certified for an experiment I developed in college, and can attest that the study would likely fail to meet many of the prerequisites.

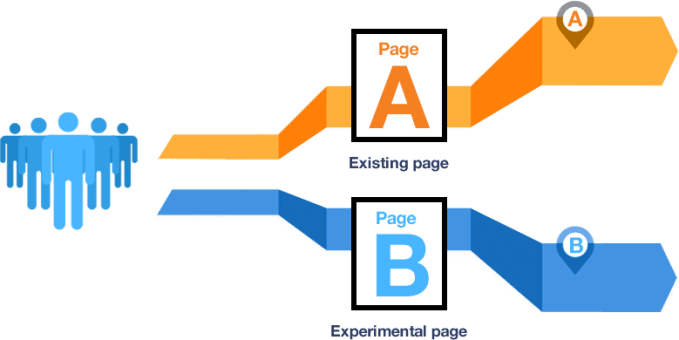

Instead, Facebook holds that it manipulates the News Feed all the time to test what types of stories and designs generate the most engagement. It wants to learn how to get you to post more happy content, and spend more time on Facebook. It saw this as just another A/B test, the kind that most major tech companies, startups, news sites, and more run all the time. Facebook technically has consent from all users, as the Data Use Policy that people automatically agree to when they sign up says “we may use the information we receive about you…for…data analysis, testing, research and service improvement.”

Many consider that to be a very weak form of consent, as participants didn’t know they were in the experiment, its scope or intent, its potential risks, or whether their data would be kept confidential, and they weren’t provided any way to opt out. Some believe Facebook should ask users for consent and offer an opt out for these experiments.

Everyone Is A/B Testing

So the negative material impact of this specific study was low and likely overblown, but the controversy vaults the ethics question into a necessary public discussion.

Sure, there are lots of A/B Tests, but most are pushing for more business-oriented results like increasing usage or clicks or purchases. This study purposefully sought to manipulate people’s emotions positively and negatively for the sake of proving a scientific theory about social contagion. Affecting emotion for emotion’s sake is why I believe the study has triggered such charged reactions. Some people don’t think the intention of the experimenter matters, because who’s to know what they really want, especially when it comes to big for-profit companies. I think it is an important factor for distinguishing what may need oversight.

Either way, there is some material danger to experiments that depress people. As Deterding notes, the National Institute of Mental Health says 9.5% of Americans have mood disorders, which can often lead to depression. Some people who are at risk of depression were almost surely part of the Facebook study group that was shown a more depressing feed, which could be considered dangerous. Facebook will endure a whole new level of backlash if any of those participants are found to have committed suicide or had other depression-related outcomes after the study.

That said, every product, brand, politician, charity, and social movement is trying to manipulate your emotions on some level, and they’re running A/B tests to find out how. They all want you to use more, spend more, vote for them, donate money, or sign a petition by making you happy, insecure, optimistic, sad, or angry. There are many tools for discovering how best to manipulate these emotions, including analytics, focus groups, and A/B tests. Oftentimes people aren’t given a way to opt out.

Facebook may have acted unethically. Its constant testing to increase engagement could fall into a grayer area, but this experiment tried to directly sway emotions.

A brand manipulating its own content to change someone’s emotions to complete a business objective is simpler and expected. A portal manipulating the presence of content shared with us by friends to depress us for the sake of science is different.

You might guess that McDonald’s, with its slogan “I’m lovin’ it,” is trying to make you feel like you’re less happy without it, and that a politician is trying to make you feel more optimistic if you vote for them. But many people don’t even understand the basic concept of Facebook using a relevancy-sorting algorithm to filter the News Feed to be as engaging as possible. They probably wouldn’t suspect Facebook might show them fewer happy posts from friends so they’ll be sadder in order to test a theory of social science.

In the end, an experiment with these intentions and risks may have deserved its own opt-in, which Facebook should consider offering in the future. No matter how you personally perceive the ethics, Facebook made a big mistake with how it framed the study and now the public is seriously angry.

But while Facebook has become the lightning rod, the issue of ethics in A/B testing is much bigger. If you believe toying with emotions is unethical, most major tech companies as well as those in other industries are guilty too.

Regulation, Or At Least Safeguards

So what’s to be done? The companies that run these tests range from large to small, and the risks of each test fall on a highly interpretable spectrum from innocuous to gravely dangerous. Banning any testing that “manipulates emotions” would cause endless arguments about what qualifies, be nearly impossible to enforce, and could often slow down innovation or degrade the potential quality of products we use.

But there are still certain companies with outsized power to impact people’s emotions in ways that are tough for the average person to understand.

That’s why a good start would be for companies running significant tests that manipulate emotions to offer at least an opt out. Not for every test, but ones with some real risk like showing users a more depressing feed. Just because everyone else isn’t doing it, doesn’t mean big tech companies can’t be pioneers of better ethics. Volunteering to provide a choice as to whether people want to be guinea pigs could bolster confidence amongst users. Let people opt out of the experiments via a settings page and give them the standard product that evolves in response to those experiments. Not everyone has to be put on the front lines to find out what works best. Consent is worth adding a little complexity to the product.

As for providing users some independent protection against harmful emotional manipulation on a grand scale, the Federal Trade Commission might consider auditing these practices. The FTC already has settlements with Facebook, Google, Twitter, Snapchat, and more companies to audit their privacy practices for ten to twenty years. The FTC could layer on ethical oversight for experimentation and product changes with the same goal of protecting consumer well-being. Unfortunately, those same FTC settlements say companies can’t take away privacy controls, which incentivizes them not to offer any new ones.

At the very least, the tech companies should educate their data scientists and others designing A/B tests about the ethical research methods associated with having experiments approved by the IRB. Even if the tech companies don’t actually submit individual tests for review, just being aware of best practices could go a long way to keeping tests safe and compassionate.

The world has quickly become data-driven. It’s time ethics caught up.