Online security is a horrifying nightmare. Heartbleed. Target. Apple. Linux. Microsoft. Yahoo. eBay. X.509. Whatever security cataclysm erupts next, probably in weeks or even days. We seem to be trapped in a vicious cycle of cascading security disasters that just keep getting worse.

Why? Well, “Computers have gotten incredibly complex, while people have remained the same gray mud with pretensions of godhood … Because of all this, security is terrible … People, as well, are broken … Everyone fails to use software correctly,” writes the great Quinn Norton in a bleak piece on Medium. “We are building the most important technologies for the global economy on shockingly underfunded infrastructure. We are truly living through Code in the Age of Cholera,” concurs security legend Dan Kaminsky.

Most of which is objectively true. And it’s probably also true, as Norton states and Kaminsky implies, that a certain amount of insecurity is the natural state of affairs in any system so complex.

But I contend that things are much worse than they actually need to be, and, further, that the entire industry has developed learned helplessness towards software security. We have been conditioned to just accept that security is a complete debacle and always will be, so the risk of being hacked and/or a 0-day popping up in your critical code is just a random, uncontrollable cost of doing business, like the risk of setting up shop in the Bay Area knowing that the Big One could hit any day.

What’s more, while that assumption is not actually true, most of the time holding it is no bad thing.

I’m pleased that I was a Heartbleed hipster, dissing OpenSSL before it was cool (i.e., ten days before Heartbleed emerged into the light), but I don’t pretend to be a security expert. I do write software for a living, though … and recent events remind me vividly of the time I attended DefCon just after Cisco tried to censor/gag-order Michael Lynn.

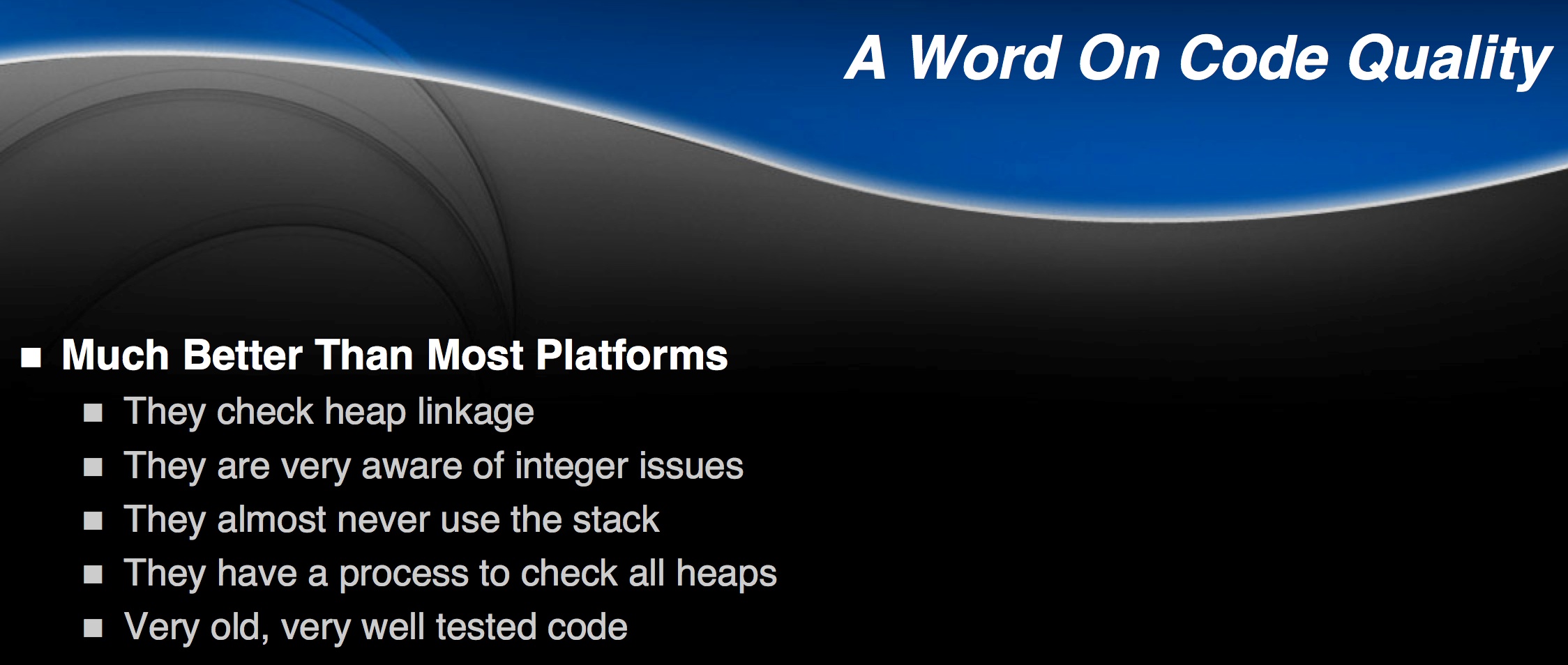

You can read the details behind the link; it’s quaint history now. But in particular, I am put in mind of this slide from his censored presentation;

Those details are largely obsolete now, but I remember that as a lo-and-the-scales-fell-from-my-eyes moment: wait, the Swiss-cheese bird’s-nest holes-the-size-of-Mack-trucks Microsoft security model doesn’t have to be the norm! People could, and actually do, design systems built for security from the ground up!

To be fair, Lynn’s talk was about exploiting a bug that existed anyway; and as Norton points out, “things are better than they used to be. We have tools … that keep the idiotically written programs where they can’t do as much harm.” But who are we kidding? We live in a world in which people still write security-critical code in C, store/send passwords in plaintext, and release hopelessly confusing security APIs. This is a world that has not much prioritized software security.

I contend that online security is so bad not because it has to be, but because there has been no systemic incentive to make it any better than it is. Sure, credit-card companies would like to reduce fraud — hence their incompetent hacks like “Verified by Visa” — but they’re still enormously profitable. Sure, eBay would rather they hadn’t been hacked; but most people will just sigh, change their passwords, and move on.

And while it’s possible to build much-more-secure systems,

> A lot of people don’t really understand the incredible amount of detail and attention to every possible outcome that needs to be made, because one mistake in the entire library can bring a system down. And that’s a flaw of the type we’re seeing with Heartbleed.

to quote Seth Hardy of the University of Toronto’s Citizen Lab. (Disclaimer/disclosure: Seth’s a friend.)

Or — Kaminsky again:

> Professor Matthew Green of Johns Hopkins University recently commented that he’s been running around telling the world for some time that OpenSSL is Critical Infrastructure. He’s right. He really is. The conclusion is resisted strongly, because you cannot imagine the regulatory hassles normally involved with traditionally being deemed Critical Infrastructure. A world where SSL stacks have to be audited and operated against such standards is a world that doesn’t run SSL stacks at all.

There’s actually quite a tricky implicit tradeoff here. We can slowly, carefully, write more secure (though still imperfect!) systems; or we can damn the torpedoes, steam full speed ahead, innovate like crazy, and treat security as an afterthought or a nice-to-have. The reason massive security disasters hit almost weekly these days is that for twenty years virtually the entire industry has, tacitly or explicitly, chosen the latter course.

…And, until now, for 95% of the Internet’s population, that has arguably been the right decision. Oh, it’s been awful if you’re an activist, a dissident, a journalist, a victim of identity theft, a specific target of the NSA, etc.; but most people aren’t. Secure software (and it does exist) is still written by a tiny minority for a tiny minority. Sad but true. That’s one reason why it’s so often so hard to use.

The good news is that we seem to finally be nearing the point at which the Internet collectively decides that much stronger online security would probably be a good idea. The bad news is that the most powerful entity on Earth appears to be virulently, bitterly opposed to any such development. But there is no natural law requiring that software be as fragile and vulnerable as most of it is today. We as an industry allowed that to happen — and if we want to, we can fix it.

Image credit: yours truly, on Flickr, of Helix by Charles Gadeken.