For the last year or so, Google Research has been working on a project that aims to make it easier to migrate tasks between different devices. The premise of this work is that many of us now use a number of computers, smartphones and tablets every day. Even though much of our data is stored in the cloud, Google argues that “there are not many ways to easily move tasks across devices that are as intuitive as drag-and-drop in a graphical user interface.”

The small form factor of a smartphone, Google’s team argues, makes it hard to collaborate on content, and handheld projectors suffer from too many hardware limitations and don’t allow for easy user input.

Earlier this year, the company’s research team that focuses on this problem showed us Deep Shot, a system for using a smartphone camera to “capture” an application like Google Maps running on a large screen and transfer it to the phone. Today, the team is turning this idea on its head and showing a new way to project smartphone content onto any display.

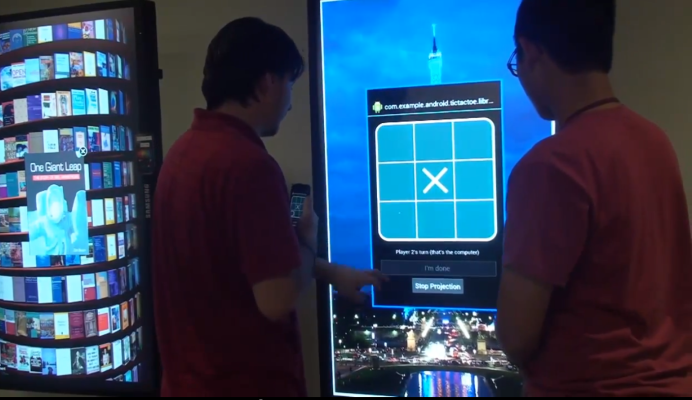

Open Project (PDF), as Google’s researchers call it, is an end-to-end framework for projecting native mobile apps onto a screen. That sounds similar to Apple’s AirPlay, but it’s actually a bit cooler, especially when it’s coupled with the giant touch screen Google uses in its demo.

In its current form, Open Project shows a QR code on the screen. All you need to do is point your phone at it and scan the code, and the app appears on the display. From there, you can move the app around on the screen and even interact with it just as you would on the phone.

A number of companies are currently looking at how they can use large touch screens to make collaboration easier. Adobe’s Project Context, for example, uses a table with a built-in touch screen and a wall of large screens in the room to allow its users to create magazine layouts. Microsoft, too, is working on similar setups based on Windows 8 and acquired Perceptive Pixel last year to experiment with large touch displays.

As always, it remains to be seen whether this project ever becomes a reality. It’s certainly an easy way to share content from your phone (assuming you have a giant wall of touch-enabled screens in your house). With a bit of added intelligence, you could even use it to share just the text or images you want to collaborate on, with the text editor or layout engine running on the machine that powers the display.

You can find the full research paper that explains the system in more detail here.