Over a year after the announcement of Google Glass, many folks I talk to still seem to be misunderstanding what Glass can actually do.

“It’ll be great for Augmented Reality!” they say, assuming that Glass can render objects directly into your full view of the world (it can’t.) “Ooh! It’ll be like Minority Report!”, expecting Glass’ camera to pick up your every hand wave (it doesn’t.)

Then they try on a pair and realize that… well, that’s not what Glass is. But it’s what Meta is aiming to be — and their first (read: still a bit rough) version is going on sale to the public starting today.

To picture the Meta, picture a pair of glasses or, more accurately in its current stage, a pair of safety goggles. Put a translucent, reflective surface in each eyepiece, displaying images on top of your field of view as piped out of a tiny projector built into each arm of the frames. Take a couple of tiny RGB/infrared cameras (essentially a miniature Kinect) and strap them to the frame. That’s the Meta.

The Meta then plugs into another device to help it with the data crunching; right now, that’s a laptop. Moving forward, it’ll be your phone.

After flying under the radar for a bit over a year, Meta debuted itself to the world on Kickstarter back in May. By the end of their campaign, they’d nearly doubled their original goal of $100,000. They promised to ship those units to their backers by the end of this month, and they say they’re on track to meet that deadline — so now they’re opening up pre-sales of the next iteration to everyone.

To be clear, the hardware they’re launching today is still quite early. It’s perhaps a bit past the “Developers Only” level, but it’s still mostly meant for the hardcore early-adopters and tinkerers. Hell, its early state is reflected in its very name; this model is called the META.01, suggesting many a revision to come. The META.01 units are going up for sale at $667, with plans to begin shipping in November.

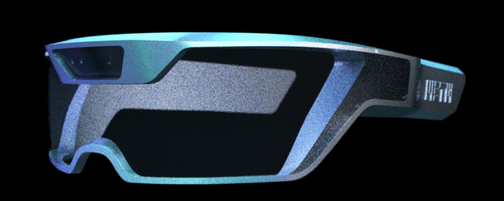

The company has pulled in a few hardware designers since their Kinect-taped-to-glasses days, allowing this iteration to be considerably more svelte than the Kickstarter variant that came before it. The META.01s will still be a bit more cumbersome than the final hardware they’re hoping to ship, but it’s a step in the right direction.

Here’s a render of what they’re aiming for with this next iteration (a design which they assure me they can pull off by November, though it’s not done yet):

I’ve met a number of companies that promise to do this sort of thing. Each time, I expected to be blown away. Each time, the company showed up more or less empty handed. One showed up with a pair of 3D-printed glasses with a basic camera built in, but no display of any sort to speak of. One showed up with nothing but a folio full of concept sketches and promises of grandeur.

Meta, meanwhile, showed up with multiple pairs of functional (though again, early prototype) glasses, and a bunch of working (if rough) tech demos.

Check out their concept video:

The second I put on the glasses, a number hovering in front of my eyes told me the distance between me and whatever I looked at. I held up my hand, and a floating rectangle appeared in space, following my palm wherever it went, expanding and closing as I opened and closed my fist.

“How well does it do Augmented Reality?”, I ask.

They grab a piece of paper off my desk: standard, blank printer paper, sans any sort of QR code or tracking marker. They punch a few buttons on the laptop, and a movie trailer starts playing on the paper. It’s not rendering perfectly edge to edge, mind you, instead sort of floating in the middle, but it’s still tracking this featureless piece of paper remarkably well as they wave it around our office, crappy overhead fluorescent lights and all. Tracking blank white objects, be it a piece of paper or a big blank wall, is one of the hardest computer vision challenges around.

Yet here they were. He bends the paper, the video bends with it. He crumples up the paper and unfolds it; the video starts playing again, now contorted to the crumples. What.

“How about hand gestures?”

They tap a few buttons. A 3D mushroom pops up, seeming to float about 2 feet from my view. “Poke it”, says Raymond Lo, the company’s CTO. I do, clumsily jabbing at where my brain perceives the mushroom to be. It takes a few seconds for me to “find” the ethereal fungus, but when I do, it’s immediately obvious. The mushroom changes shape around my finger like a glob of clay, completely intangible but seemingly somehow there. Meta hopes that people will someday be doing full-fledged 3D modeling with this technology, sending their creations directly to their 3D printer.

The demo eventually lost track of my hand and wasn’t able to get it back — forgivable, given the early state of the project — but for a few fleeting seconds, I was finally getting a glimpse of AR tech that so many teams had promised me before. It’s early. It’s rough. But damn, is it cool.

And I’m not the only one impressed, even in these early days. Steve Feiner, one of the world’s leading AR experts and head of the AR research department at Columbia University, is signed on as their lead advisor. Steve Mann, oft dubbed the “father of wearable computing”, is their Chief Scientist. They breezed into Y Combinator, and I hear that investors have been knocking ever since.

The META.01 glasses are on sale beginning today at Meta’s newly acquired (and hilariously self-aware) domain, SpaceGlasses.com.