Rumors have been circulating that Google Glass may have a feature that lets you wink to take a picture. Developers digging through the kernel source code have found that the feature does exist, buried deep in the code, but for most Google Glass users it isn’t available as an option on the front end.

However, TechCrunch has confirmed with multiple sources, who wish to remain anonymous for obvious reasons, that the wink feature is indeed real and being used by a small number of engineers who were seeded with the original developer units of Google Glass. In other words, those who are developing for Glass as part of the second wave of units (#ifihadglass) are not privy to the feature, as far as we know.

In fact, one source told us that Google staff actually came on site to physically install an updated version of the software that unlocks this feature, which then appears in settings. Developers have already started building applications that use the wink feature, but Google can also offer the command at the OS level.

Here’s how it works: at any time while Google Glass is on your head and turned on, you can perform an extended wink (much like the one Lucille Bluth does repeatedly in Arrested Development*) to snap a picture instantly.

A second source explained to us that Glass actually trains itself to recognize your wink. In other words, you calibrate the tool so that Glass recognizes what your particular “wink” looks like. Without calibrating the length of a purposeful, command-giving wink, Glass would pick up each and every blink as a photo op. Obviously.
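Our sources only say that Glass calibrates the length of a purposeful wink. To make that idea concrete, here is a minimal sketch, assuming the only signal is how long the eye stays closed and that a simple midpoint cutoff is good enough. The function names, the millisecond figures and the midpoint rule are all hypothetical; this is not Google’s code.

```python
# Hypothetical sketch: separating a deliberate "command" wink from an ordinary
# blink by how long the eye stays closed. This is NOT Google's code; the
# midpoint rule and every name here are assumptions for illustration.


def calibrate_threshold(blink_ms: list[float], wink_ms: list[float]) -> float:
    """Return a closure-duration cutoff (ms) learned from calibration samples.

    Assumes every deliberate wink lasts longer than every recorded blink and
    places the cutoff halfway between the longest blink and the shortest wink.
    """
    longest_blink = max(blink_ms)
    shortest_wink = min(wink_ms)
    if shortest_wink <= longest_blink:
        raise ValueError("winks and blinks overlap; ask the user to recalibrate")
    return (longest_blink + shortest_wink) / 2


def is_command_wink(closure_ms: float, threshold_ms: float) -> bool:
    """Treat an eye closure as a photo command only if it exceeds the cutoff."""
    return closure_ms > threshold_ms


if __name__ == "__main__":
    # Made-up calibration data, in milliseconds.
    threshold = calibrate_threshold([110, 150, 180, 130], [420, 500, 460])
    print(threshold)                        # 300.0
    print(is_command_wink(160, threshold))  # False: an ordinary blink
    print(is_command_wink(450, threshold))  # True: an extended wink
```

Skip the calibration step and the cutoff has nothing to separate, which is exactly the "every blink becomes a photo op" problem described above.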

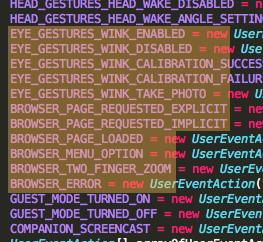

Multiple sources confirmed that the wink feature is available as an option in settings, once Google has updated the unit with the proper version of the software. The kernel also confirms this, as the code has options for “ENABLED” and “DISABLED,” as well as information on “CALIBRATION,” just as one of our sources described.

Sensors

Google has not clarified the exact number or names of the sensors within Google Glass, though many believe it contains both an infrared sensor on the inner portion of the headset and a proximity sensor baked inside. According to Google’s official statement, the proximity sensor handles the “waking” and “sleeping” states of your device.

Just like a smartphone, Google Glass will go to sleep when you put it down, halting incoming calls and messages and turning off the display (though keeping the camera button alert in case there’s a Kodak moment afoot). When you pick it up and place it on your head, it instantly wakes back up and starts receiving notifications, etc.
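The sleep/wake behavior boils down to a two-state machine keyed off the proximity reading. The sketch below is purely illustrative: the class and method names are invented, and holding notifications for delivery on wake is an assumption, since the article only says calls and messages are halted while Glass sleeps.

```python
# Hypothetical sketch of the sleep/wake behavior described above, driven by
# a proximity ("on head?") reading. Names are illustrative, not Glass APIs.
# Holding notifications for later delivery is an assumption; the article
# only says incoming calls and messages are halted while asleep.
from dataclasses import dataclass, field


@dataclass
class GlassPower:
    awake: bool = True
    held: list[str] = field(default_factory=list)       # arrived while asleep
    delivered: list[str] = field(default_factory=list)  # shown to the wearer

    def on_proximity(self, on_head: bool) -> None:
        """Sleep when Glass is taken off; wake when it is put back on."""
        if on_head and not self.awake:
            self.awake = True
            self.delivered.extend(self.held)  # catch up on what was held
            self.held.clear()
        elif not on_head:
            self.awake = False  # display off, notifications paused

    def notify(self, message: str) -> None:
        """Deliver immediately if awake, otherwise hold the message."""
        if self.awake:
            self.delivered.append(message)
        else:
            self.held.append(message)

    def press_camera_button(self) -> bool:
        """The camera button stays live in either state, so this always fires."""
        return True
```

For example, calling `on_proximity(False)` and then `notify("new message")` leaves the message in `held` until `on_proximity(True)` moves it to `delivered`, while `press_camera_button()` works the whole time.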

The infrared sensor, on the other hand, is far more mysterious. Google hasn’t really spoken about it much, though sources around the web tend to believe that the unidentified little sensor on the inner rim of the headset is indeed an infrared camera. This would allow Glass to track eye movements to some degree. As our sources have clearly confirmed, the IR camera can at the very least detect a blink and a wink, and the possibilities beyond that are deep and wide. Just take a look at these Google patents.

Patents

The first is a patent that names Adrian Wong, a Google Glass engineer; Ryan Geiss, a senior software engineer at Google; and Hayes Raffle, an interactions researcher on the Special Projects team at Google.

The title? “Unlocking a screen using eye tracking information”.

The patent broadly describes a method by which a user could unlock a display (most often referred to as a heads-up display on a wearable computing device) through various forms of eye tracking. Sure, unlocking a device and snapping a picture are different things, and the unlocking method this patent describes differs from what we’ve learned about the Glass wink command for pictures.

However, it’s worth noting that the patent mentions the word “infrared” 26 times and the term “HMD” (head-mounted display) more than 100 times. There also seems to be a passage within the patent that confirms the ability to detect blinks (if only to disregard them in this instance, but still).

To unlock a screen coupled to the HMD after a period of inactivity that may have caused the screen to be locked, a processor coupled to the wearable computing system may generate a display of a moving object and detect through an eye tracking system if an eye of the wearer may be tracking the moving object. The processor may determine that a path associated with the movement of the eye of the wearer matches or substantially matches a path of the moving object and may unlock the display. The path of the moving object may be randomly generated and may be different every time the wearer attempts to unlock the screen. Tracking a slowly moving object may reduce a probability of eye blinks, or rapid eye movements (i.e., saccades) disrupting the eye tracking system. The processor may generate the display of the moving object such that a speed associated with motion of the moving object on the HMD may be less than a predetermined threshold speed. Onset of rapid eye pupil movements may occur if a speed of a moving object tracked by the eye of the wearer is equal to or greater than the predetermined threshold speed. Alternatively, the speed associated with the moving object may be independent of correlation to eye blinks or rapid eye movements. The speed associated with the motion of the moving object may change, i.e., the moving object may accelerate or decelerate. The processor may track the eye movement of the eye of the wearer to detect if the eye movement may indicate that the eye movement may be correlated with changes in the speed associated with the motion of the moving object and may unlock the screen accordingly.

Now, take a look at this patent.

Though it doesn’t go into any detail on eye tracking, it does reaffirm Google’s intention to use infrared sensors in its head-mounted, wearable computing devices. A year later, that device is called Google Glass.

Next Steps

Whether Google intends to roll out this feature more broadly is still unknown.

Since Google is allowing a small number of developers to use “wink,” the company is clearly staying true to its tradition of beta testing services thoroughly before a huge rollout. In fact, anyone wearing Glass right now is undoubtedly a beta tester of the whole operation.

But wink will almost certainly raise privacy questions. If you feel that a simple, spoken “Ok glass, take a picture” is already too much of an invasion of privacy, imagine how you’ll feel when some Glasshole can take your picture without you ever being the wiser.

On the other hand, the wink also opens up all kinds of interesting use cases, such as detecting when someone is having a seizure. People were afraid of geolocation, CCTV, and online banking at one point, too. And look how that turned out.

It’s too soon to tell whether Glass will fly or die, but it can sure as hell wink.