As I’ve noted before, Google’s shift to social as a company comes with great responsibility. As the company makes this transition, there are a lot of things to think about when building social layers to scale to the masses. One of those responsibilities is the safety and security of its users.

Today, Google rolled out new safety and security controls for Google+, including comment moderation and reporting, as well as new controls for Hangouts.

The changes are important because they let you tell Google in granular detail why you are blocking someone or why their content or actions are offensive. This information will help the company make better decisions about its products and take appropriate measures, including kicking people off the service in extreme cases.

Here’s what Google’s Pavni Diwanji had to say about these measures:

Keeping the community safe and secure

We understand how much you value safety and security online. It’s why we’ve built tools like comment moderation (http://goo.gl/3EkPV) and hangout controls (http://goo.gl/ZLiA1) into Google+ to weed out bad behavior. But we’re always looking to do better. Today we’re excited to roll out new controls that will ensure our community remains a place where people look out for each other.

Reporting content in the stream

You’ll now have more options when reporting bad posts, comments, profiles or photos. This will mean we can deal with misconduct more quickly, and prevent abuse from happening again.

Reporting people in public hangouts

If you ignore someone in a hangout, we immediately mute their audio and video to keep you safe. Now when you report someone in a public hangout, we’ll also automatically record a small snippet and notify the room. We can then check for bad behavior—and once we’ve done that we’ll delete the clip.

Thanks to millions of community members worldwide, Google+ is a secure and inspiring place to spend time. With today’s improvements, and your help, we’ll keep it that way.

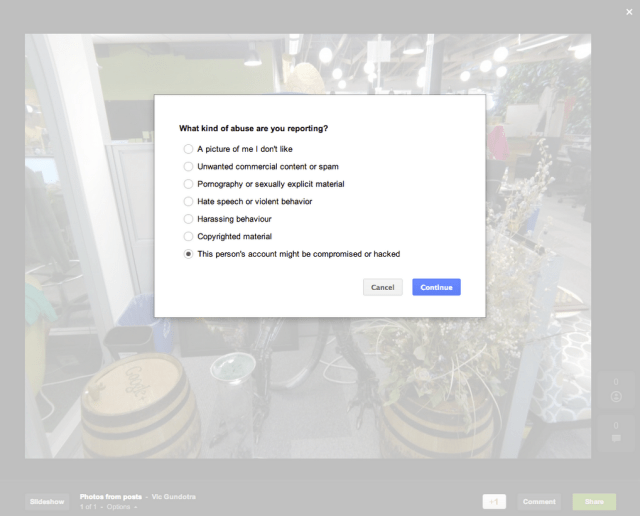

Take a look at this screen for an example, once you’ve marked someone to report:

What you see above lets you tell Google exactly what the issue is, and the addition of “this person may be hacked” is helpful. It means people, not a machine, get to decide whether a friend is acting weird. For example, if you saw me posting about how much I love strawberries, you’d know that it’s not me. Why? Because I’m allergic to strawberries.

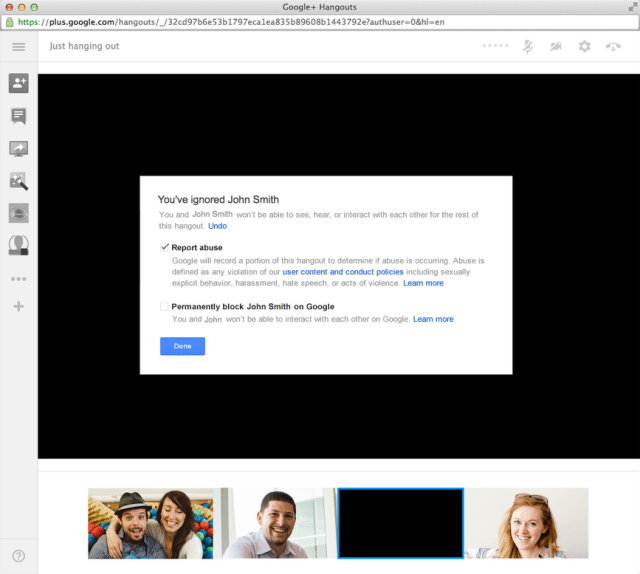

Below, you’ll see some of the actions being taken in Google+ Hangouts, which include spelling out the rules and regulations of participating in them. This helps users who are reporting issues know whether something is indeed breaking the rules or not:

Google will even record a portion of a Google+ Hangout when something egregious is reported, but only if the Hangout is a public one. For example, this could include nudity or other things that just aren’t welcome on the system and aren’t good for the userbase.

I really like the approach to safety and security for the community that Google is taking. It’s easy to understand and it’s easy to use. Of course, all of these things will evolve over time, but by setting itself up to get more details on the actual report, the company can only get better at handling these situations in a human and social way.

[Photo credit: Flickr]