Editor’s note: This is a guest post by Jinesh Varia, Technology Evangelist, Amazon Web Services. He has been helping developers and businesses unleash the real power of the cloud since 2006. He tweets at @jinman and blogs at http://aws.typepad.com/.

As a technology evangelist, I’m constantly on the road talking to businesses and customers about their technology infrastructure. I’m frequently peppered with questions about pretty much everything related to building, running, scaling and managing apps in the cloud. And I rarely leave a meeting without talking about cost – and more specifically, how to figure out whether the cloud is cheaper or more expensive than running applications in an on-premises data center.

Weighing the financial considerations of owning and operating a data center facility versus employing a cloud infrastructure requires detailed and careful analysis. Given the large differences between the two models, it is challenging to perform accurate apples-to-apples cost comparisons between on-premises data centers and cloud infrastructure that is offered as a service. In practice, it is not as simple as just measuring potential hardware costs alongside utility pricing for compute and storage resources. The Total Cost of Ownership (TCO) is often the financial metric used to estimate and compare direct and indirect costs of a product or a service. While there are a number of different ways to calculate TCO and a number of cost factors to consider, there are two critical areas that often get ignored and should be incorporated when calculating TCO – these are personnel costs and application usage patterns.

Know your personnel cost

First, the personnel cost. While it can be extremely difficult to slice the time spent by admins on projects, especially when you have hundreds of applications spread across thousands of servers and multiple data centers, personnel costs add up quickly and are one of the most important factors to consider while calculating TCO.

The cost of acquiring and operating your own data center or leasing colocated equipment needs to factor in everything required to run even the most basic data center. This starts with procuring the data center space and arranging power, cooling and physical security. It continues with buying the servers, wiring them up, connecting them to the storage and backup devices, and building a network infrastructure. Finally, all of the hardware has to be imaged with the right software, provisioned and managed, with ongoing maintenance performed and problems fixed in a reasonable timeframe.

Personnel costs include the cost of the sizable IT infrastructure teams that are needed to handle the “heavy lifting” of managing physical infrastructure:

- Hardware procurement teams are needed. These teams have to spend a lot of time evaluating hardware, negotiating contracts, holding hardware vendor meetings, managing delivery and installation, etc. It’s expensive to have a staff with sufficient knowledge to do this well.

- Data center design and build teams are needed to create and maintain reliable and cost-effective facilities. These teams need to stay up-to-date on data center design and be experts in managing heterogeneous hardware and the related supply chain, managing legacy software, moving facilities, scaling and managing physical growth—all the tasks that an enterprise needs to do well if it wants to achieve low incremental costs.

- Operations staff is needed 24/7/365 in each facility.

- Database administration teams are needed to manage the MySQL/Oracle/SQL Server databases. This staff is responsible for installing, patching, upgrading, migrating, backing up, snapshotting and recovering databases, as well as ensuring availability, troubleshooting, and tuning performance.

- Networking teams are needed for running a highly available network. Expertise is needed to design, debug, scale, and operate the network and deal with the external relationships necessary to have cost-effective Internet transit.

- Security personnel are needed at all phases of the design, build, and operations process.

While the actual personnel costs to support projects typically involve many different people, we use a simple server-to-people ratio in our cost models for the sake of simplicity. For examples of our cost models, see our whitepaper The Total Cost of (Non) Ownership of Web Applications in the Cloud. The actual server-to-people ratio can vary a lot because it depends on a number of factors, such as the sophistication of automation and tools and the preference for virtualized vs. non-virtualized environments. We recommend that you include the personnel costs of all the people involved in building and managing a physical data center, not just the people who rack and stack servers (that’s why we’re calling the ratio “server-to-people” instead of “server-to-admin”). The TCO should factor in the actual costs of procurement, management, maintenance and decommissioning of hardware resources over their useful life (typically a 3- or 5-year period).
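To make the server-to-people ratio concrete, here is a minimal sketch of how it could feed into a personnel cost estimate. The function name, the ratio, the per-person cost and the server count are all hypothetical placeholders chosen for illustration; they are not figures from the whitepaper, and your own numbers will differ.

```python
import math


def personnel_cost(servers, servers_per_person, annual_cost_per_person, years=3):
    """Estimate personnel cost over the useful life of the hardware.

    Headcount is rounded up, on the assumption that you cannot
    staff a fraction of a person.
    """
    if servers_per_person <= 0:
        raise ValueError("servers_per_person must be positive")
    headcount = math.ceil(servers / servers_per_person)
    return headcount * annual_cost_per_person * years


# Hypothetical inputs: 120 servers, 50 servers per person,
# $100,000 fully loaded annual cost per person, 3-year useful life.
print(personnel_cost(120, 50, 100_000, years=3))  # 3 people x 100,000 x 3 years = 900000
```

Remember that the "people" in this ratio should cover everyone involved in building and managing the facility, so the per-person cost and the ratio itself both deserve a careful look before you trust the result.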

Know your application’s usage

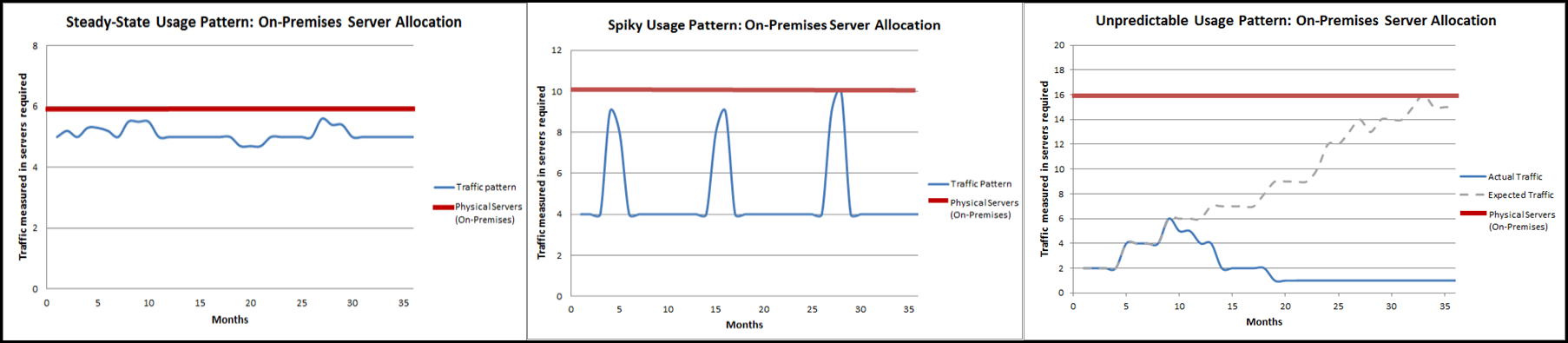

Usage traffic can dramatically affect the TCO of a web application. If you do a TCO calculation assuming a set peak capacity for your workload, when in fact it has a spiky usage pattern, such as a flood of web traffic during a sporting event, then you might not get the right cost picture in your TCO analysis. When determining TCO, you should consider the nature of the application and historical statistical data to help determine the usage pattern. While there are a number of different usage patterns, the ones that we see often are:

- Steady State. The load remains at a fairly constant level over time and you can accurately forecast the likely compute load for these applications.

- Spiky but Predictable. You can accurately forecast the likely compute load for these applications, even though usage varies by time of day, time of month, or time of year.

- Uncertain and Unpredictable. It is difficult to forecast the compute needs for these applications because there is no historical statistical data available.

The graphs at the top of this section show these three usage patterns.

Cloud computing allows businesses to match compute and database capacity to the usage pattern, which saves money and allows them to scale to meet performance objectives. With on-premises infrastructure, you really only have one option for all three usage patterns: you have to pay upfront for the infrastructure that you think you’ll need, and then hope that you haven’t over-invested (paying for unused capacity) or under-invested (risking performance or availability issues). Moreover, if you make a mistake with the type of hardware, you are stuck with it.
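The effect of the usage pattern can be sketched with a few lines of arithmetic. The example below is a simplified illustration, not our cost model: it assumes a hypothetical hourly demand profile measured in servers, and it deliberately uses the same price per server-hour for both options so that only the usage pattern differs. The function names and numbers are made up for this sketch.

```python
def fixed_capacity_cost(hourly_demand, cost_per_server_hour):
    """Fixed capacity: provision for the peak and pay for it every hour."""
    peak = max(hourly_demand)
    return peak * len(hourly_demand) * cost_per_server_hour


def pay_per_use_cost(hourly_demand, cost_per_server_hour):
    """Elastic capacity: pay only for the servers in use each hour."""
    return sum(hourly_demand) * cost_per_server_hour


def utilization(hourly_demand):
    """Fraction of peak-provisioned capacity that is actually used."""
    return sum(hourly_demand) / (max(hourly_demand) * len(hourly_demand))


# Hypothetical one-day demand profiles, in servers needed per hour.
steady = [10] * 24                # steady state
spiky = [2] * 20 + [10] * 4       # quiet most of the day, 4-hour spike

for name, demand in [("steady", steady), ("spiky", spiky)]:
    print(name,
          fixed_capacity_cost(demand, 1),
          pay_per_use_cost(demand, 1),
          round(utilization(demand), 2))
```

With the steady profile the two costs are identical, while with the spiky profile the fixed-capacity option pays for the peak in every hour even though most of that capacity sits idle. A real comparison would also need different unit prices and the other TCO factors discussed above.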

While the number and types of services offered by AWS have increased dramatically, our philosophy on cloud computing pricing has not changed. You pay as you go, pay for what you use, pay less as you use more and grow bigger, and pay even less when you reserve capacity.
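One way to reason about "pay even less when you reserve capacity" is a break-even calculation. The sketch below assumes a simple reservation structure (a one-time upfront fee in exchange for a lower hourly rate) and uses hypothetical prices throughout; it is not actual AWS pricing, and the function names are invented for illustration.

```python
def break_even_hours(upfront_fee, on_demand_rate, reserved_rate):
    """Hours of usage at which reserving becomes cheaper than on-demand."""
    if reserved_rate >= on_demand_rate:
        raise ValueError("reserved_rate must be lower than on_demand_rate")
    return upfront_fee / (on_demand_rate - reserved_rate)


def cheaper_option(hours_used, upfront_fee, on_demand_rate, reserved_rate):
    """Compare total cost of the two options for a given number of hours."""
    on_demand_total = hours_used * on_demand_rate
    reserved_total = upfront_fee + hours_used * reserved_rate
    return "reserved" if reserved_total < on_demand_total else "on-demand"


# Hypothetical prices: $300 upfront, $0.50/hour on-demand, $0.25/hour reserved.
print(break_even_hours(300, 0.5, 0.25))      # 300 / 0.25 = 1200.0 hours
print(cheaper_option(1000, 300, 0.5, 0.25))  # below break-even: on-demand
print(cheaper_option(2000, 300, 0.5, 0.25))  # above break-even: reserved
```

This ties back to the usage patterns above: a steady-state workload that runs most hours of the year is likely to pass the break-even point, while an uncertain or rarely used workload may not.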

Cloud computing not only provides lower prices and greater flexibility; it also lets you get more done, try more new ideas, and gain substantial business agility. You get to focus scarce engineering resources on initiatives that differentiate your business rather than on the undifferentiated heavy lifting of infrastructure. When you take the savings from the cloud computing pricing model and multiply them across the hundreds of applications that your company manages, it becomes clear how powerful this model is for your applications and for the overall economics of your business or organization.