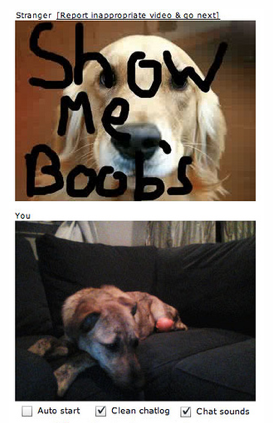

At the end of last year, social networking site myYearbook shifted its focus toward games and introduced a live video chat feature that could have completely backfired. But instead of turning into the next Chatroulette, the site has managed to keep unwanted live porn to a minimum. While Chatroulette still has an estimated nudity rate of 1 in 50 videos, myYearbook has cut its nudity rate to 1 in 1,000. In a Q&A, myYearbook CEO Geoff Cook explains the strategies he used to get there.

Q: When you decided to add live video chat to your site, what were you thinking? I mean, seriously, what were you thinking?

When we decided to build a Live Video gaming platform, the best example of Live Video at scale was Chatroulette, and it was full of porn. At the time, 1 out of every 10 video streams on Chatroulette was obscene.

Chatroulette was growing in part because it was obscene: it was the accident victim, and the public was the rubbernecker. Chatroulette’s traffic peaked in March 2010, the same month that Jon Stewart screamed “I hate Chatroulette!” into the camera to end a segment that would prove to be the service’s high-water mark.

While we were bothered by the content, we found the visceral social experience Chatroulette represented compelling. We loved the serendipity of the Next button and set out to build a service that would realize its promise. A lot of our effort went into matching users by location, age, and gender in real time, while building out a gaming platform to give them something to do beyond chat. Since launching in January 2011, we’ve grown to 750,000 video chats a day, with a nudity rate one-hundredth of Chatroulette’s a year ago.

Q: How did you do it?

The core of our abuse-prevention approach is a system that captures and analyzes thousands of images a second from the hundreds of thousands of streams we see each day. We sample users’ video streams at random, frequent intervals and then process the resulting images, both algorithmically and with human reviewers.
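
The interview doesn't say how capture times are chosen. One common way to get "random, frequent intervals" is to draw the gap between captures from an exponential distribution, so a user can't predict when the next frame will be grabbed. The sketch below assumes that approach; `sample_times`, the 10-minute stream, and the 5-second mean gap are hypothetical values chosen for illustration, not myYearbook's actual settings.

```python
from __future__ import annotations

import random


def sample_times(duration_s: float, mean_gap_s: float, rng: random.Random) -> list[float]:
    """Return capture timestamps (seconds from stream start) for one stream.

    Gaps between captures are exponentially distributed (a Poisson process),
    so captures are frequent on average but unpredictable to the user.
    Hypothetical sketch, not myYearbook's actual scheduler.
    """
    times: list[float] = []
    t = rng.expovariate(1.0 / mean_gap_s)  # time of the first capture
    while t < duration_s:
        times.append(t)
        t += rng.expovariate(1.0 / mean_gap_s)  # wait a random gap before the next one
    return times


if __name__ == "__main__":
    rng = random.Random(42)  # seeded only so the example is repeatable
    stamps = sample_times(duration_s=600, mean_gap_s=5, rng=rng)
    print(f"{len(stamps)} captures in a 10-minute stream")
```

With a 5-second mean gap, a 10-minute stream yields about 120 captures on average; raising or lowering `mean_gap_s` trades human-review load against how quickly abuse is caught.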

Q: What did you find out from this process?

One early finding was that images with faces are 5 times less likely to contain nudity than images without faces. That will make sense to anyone who has used Chatroulette: the most common pornography encountered there features a body part other than, ahem, the face. This is useful because open-source face detection is relatively advanced, while detection of other body parts is much less so. As a result, the presence of a face can be used to reduce some of the human review burden.

Q: Does the fact that there’s a face in an image mean it’s free of porn?

The mere presence of a face does not make an image clean. In fact, around 20% of nudity-containing streams also contain a face. However, with a lot of effort and additional processing logic that weighs many factors, such as chat reputation, social graph, and motion, we’ve made the presence of a face helpful in determining “safe” images. Of course, an image marked “safe” may itself be a false negative, so we also have humans review a sample of “safe” images, at a lower sample rate than images not marked “safe.”
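
The interview names the signals but not how they are combined. The sketch below assumes the simplest possible rule: an image counts as "safe" only if a face is present and every other signal clears a threshold, and "safe" images still go to human review, just at a lower rate. `FrameSignals`, the thresholds, both review rates, and the choice to treat low motion as lower risk are all hypothetical; a real system would more likely learn weights than hard-code an AND of thresholds.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass
class FrameSignals:
    """Per-image signals; every field is a hypothetical stand-in."""
    has_face: bool        # from an assumed open-source face detector
    reputation: float     # hypothetical chat-reputation score, 0.0 to 1.0
    mutual_friends: int   # hypothetical social-graph signal
    motion: float         # hypothetical motion score, 0.0 (still) to 1.0


# Made-up thresholds and sampling rates, for illustration only.
MIN_REPUTATION = 0.7
MIN_MUTUAL_FRIENDS = 1
MAX_MOTION = 0.5           # assumption: low motion is treated as lower risk
SAFE_REVIEW_RATE = 0.05    # fraction of "safe" images still sent to humans
UNSAFE_REVIEW_RATE = 0.5   # higher rate for everything else


def is_safe(s: FrameSignals) -> bool:
    """A face alone is not enough (about 20% of nudity-containing streams
    show one), so this sketch also requires corroborating signals."""
    return (
        s.has_face
        and s.reputation >= MIN_REPUTATION
        and s.mutual_friends >= MIN_MUTUAL_FRIENDS
        and s.motion <= MAX_MOTION
    )


def needs_human_review(s: FrameSignals, rng: random.Random) -> bool:
    """Sample "safe" images at a lower rate, since they can be false negatives."""
    rate = SAFE_REVIEW_RATE if is_safe(s) else UNSAFE_REVIEW_RATE
    return rng.random() < rate
```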

Q: What happens once a human steps in?

The heart of our human-powered solution is a two-tiered image review organization that enables each reviewer to scan 400 images a minute for abusive content. Both tiers are staffed 24 hours a day, 7 days a week, 365 days a year. Our goal is to review streams with no more than a 5-minute delay. We have a zero-tolerance policy: if two reviewers deem your behavior inappropriate, your account is removed and you are banned from the site forever. Based on our findings, we believe purely algorithmic approaches to moderation will never provide adequate safety.
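
The interview doesn't say whether the two reviewers must come from different tiers or how flags are recorded. The sketch below assumes only that a ban triggers once two distinct reviewers flag the same account; `ReviewLedger` and its method names are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


class ReviewLedger:
    """Track reviewer flags and ban an account once enough distinct
    reviewers have flagged it. Hypothetical sketch of a two-reviewer rule."""

    def __init__(self, reviewers_to_ban: int = 2) -> None:
        self.reviewers_to_ban = reviewers_to_ban
        self._flagged_by: defaultdict[str, set[str]] = defaultdict(set)
        self.banned: set[str] = set()

    def flag(self, account_id: str, reviewer_id: str) -> bool:
        """Record that reviewer_id judged account_id inappropriate.

        Returns True if the account is banned after this flag.
        A repeat flag from the same reviewer does not count twice.
        """
        if account_id in self.banned:
            return True
        self._flagged_by[account_id].add(reviewer_id)
        if len(self._flagged_by[account_id]) >= self.reviewers_to_ban:
            self.banned.add(account_id)
        return account_id in self.banned
```

Using a set of reviewer IDs per account is the design choice that enforces "two reviewers" rather than "two flags." For scale, 400 images a minute works out to roughly 6.7 images a second per reviewer.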

Q: How does this compare to what Chatroulette is doing?

As our product has grown, we’ve noticed Chatroulette make some progress in reducing its nudity problem as well. On a recent night, a review of 1,500 Chatroulette video streams yielded a 1.9% abuse rate, or roughly a 1 in 50 chance of encountering nudity on any click of the Next button. This compares to a less than 1 in 1,000 chance on myYearbook.
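
The figures quoted above can be checked directly. Because 1.9% of 1,500 is not a whole number, the reported rate is rounded and corresponds to 28 or 29 flagged streams. And because myYearbook's rate is stated as less than 1 in 1,000, the final ratio is a lower bound: Chatroulette's rate is more than 19 times higher.

$$
\begin{aligned}
0.019 \times 1{,}500 &= 28.5 \quad (\text{i.e. } 28 \text{ or } 29 \text{ streams}) \\
\frac{1}{0.019} &\approx 52.6 \;\Rightarrow\; \text{about 1 in 50 clicks} \\
\frac{0.019}{0.001} &= 19
\end{aligned}
$$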

Q: Why the order-of-magnitude discrepancy?

myYearbook requires a login. While much has been made of Facebook Connect as an identity layer that will discourage abuse, we don’t believe identity per se plays much of a role. Someone who is interested in taking down their pants will do it even on an iPhone: the now-banned iChatr app was quickly overrun by abuse, even though every phone can uniquely identify its user. What matters more is that there is any login at all.

Q: What difference does a login make?

So long as there is any login, a user’s device can be blocked, and we’ve found that people who take down their pants for strangers generally lack a certain je ne sais quoi when it comes to circumventing security systems (unlike, say, spammers). We use a technology called ThreatMetrix to fingerprint devices and ban both the user and their physical device when we detect abuse. ThreatMetrix helps give our zero-tolerance policy its teeth.
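
A login gives the site a single checkpoint where both the account and the device can be tested against a ban list. The sketch below assumes the device fingerprint arrives as an opaque string (for example from a fingerprinting service such as ThreatMetrix, whose actual API is not modeled here); `BanList`, `ban`, and `may_log_in` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class BanList:
    """Ban both the account and the device it was used from.

    device_id is an opaque fingerprint string assumed to come from a
    device-fingerprinting service; no real vendor API is used here.
    """
    banned_accounts: set[str] = field(default_factory=set)
    banned_devices: set[str] = field(default_factory=set)

    def ban(self, account_id: str, device_id: str) -> None:
        self.banned_accounts.add(account_id)
        self.banned_devices.add(device_id)

    def may_log_in(self, account_id: str, device_id: str) -> bool:
        # A fresh account on a banned device is refused too, so a banned
        # user can't simply sign up again from the same hardware.
        return (account_id not in self.banned_accounts
                and device_id not in self.banned_devices)
```

The second check is the important design choice: banning only the account would let the same person register a new one and carry on from the same device.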

Q: Couldn’t you do this with photos also?

Our system for reviewing live video has proven so successful that we are now actively bringing a similar system to bear on every photo uploaded to myYearbook. In a few months’ time, we will have perfect insight into every image posted to the service, and we believe we can make incremental gains there as well by fundamentally turning a report-based system into a proactive one. Eradicating abuse from user-generated content is a never-ending, human- and machine-intensive problem that may well spell the difference between success and failure, especially when you are dealing with live video.

Image: Nick Bilton