Google Books is one of the most straightforward projects in the Google meta-project of cataloguing and indexing every piece of data in the world. The human race has, after all, only been literate for around five or six thousand years, which makes the task measurable, if not easy. The project is also interesting for many other reasons: social, technological, and logistical. The impact of all of the world’s literature being searchable online is incalculable, and the methods Google is using to accomplish that are a fascinating convergence of legacy and high-tech systems.

The project blog has just put up a fascinating (to me, at least) post about the way in which they’ve calculated what they believe is a reasonably accurate count of every book in the world. The number is 129,864,880 — until a few more get added, or an obscure library’s records are merged, or what have you. It’s a bit awe-inspiring to be confronted with a number like that — a number far more comprehensible than yesterday’s deceptively complex statement about the amount of data we’re producing daily. I have another post percolating on that subject (working title: Get Thee Behind Me, Data) but the Google Books thing has a much more immediate and understandable interest.

I won’t get into the exact methods they used — the post goes into detail on that, obviously — but I love the challenges they’re meeting. So many lessons for the future! So many questions raised about how data is handled fundamentally!

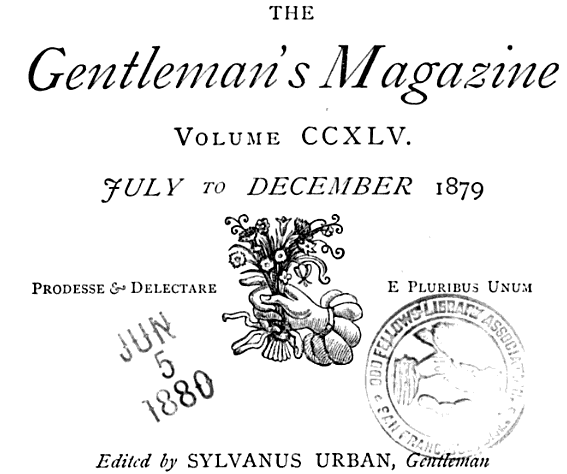

For example: they are working with several ISBN-like catalogues with slightly different goals, plus a great number of university libraries, public libraries, private collections, museums, and so on, all working with similar metadata but introducing the kinds of small variations that are very difficult to detect. Designing algorithms to distinguish originals among many very similar records is difficult, and almost certainly requires frequent human intervention. Those algorithms must be, in some ways, much like the ones used to sort, classify, and analyze large numbers of pictures. I’d like to know more.
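To make the record-matching problem a little more concrete, here is a minimal sketch in Python. It is emphatically not Google's method (their post covers that); it is just my guess at the general shape of the problem. The record fields (`title`, `author`), the function names, and both thresholds are assumptions I made up for illustration. The idea is to normalize the metadata, score how similar two records are, and send the ambiguous middle band to a human.

```python
import re
import unicodedata
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase, strip accents and punctuation, collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", text)
    no_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    cleaned = re.sub(r"[^a-z0-9 ]+", " ", no_accents.lower())
    return " ".join(cleaned.split())


def similarity(a: dict, b: dict) -> float:
    """Average the fuzzy similarity of title and author, each in [0, 1]."""
    fields = ("title", "author")  # hypothetical field names
    scores = [
        SequenceMatcher(None, normalize(a[f]), normalize(b[f])).ratio()
        for f in fields
    ]
    return sum(scores) / len(scores)


def classify(a: dict, b: dict, same: float = 0.9, review: float = 0.7) -> str:
    """Label a pair of records; the thresholds are arbitrary illustrative values."""
    score = similarity(a, b)
    if score >= same:
        return "duplicate"
    if score >= review:
        return "needs_review"  # the band where a human would step in
    return "distinct"


if __name__ == "__main__":
    library_a = {"title": "Moby-Dick; or, The Whale", "author": "Melville, Herman"}
    library_b = {"title": "Moby Dick, or the Whale", "author": "melville herman"}
    print(classify(library_a, library_b))
```

The two Moby-Dick records differ only in punctuation and capitalization, so they normalize to the same strings and come back as `duplicate`. A real catalogue would need far more than this (editions, translations, multi-volume sets, conflicting identifiers), which is presumably where the frequent human intervention comes in.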

The method of scanning and OCR-ing the books, too, must be more complicated than it sounds. What is the tolerance for errors? Do they have different drivers to work with certain print types? Does a human have to change the curves on a set of pages to account for foxing? And how come they leave in so many pages with the page-turner’s thumb in them?

Also, the granularity of the literary world makes things very easy in many ways. Most works have a well-defined start and finish, and things like periodicals and journals arrive as regular, individual releases. The obvious question is: how will the internet be “archived”? I mean, clearly that’s already happening. But will we ever “package” sites, date ranges, and so on in a standard way, with standard metadata? The Internet Archive is doing this to some extent, but I’m not sure it’s the kind of rigorous archival that scholars crave, useful as it is. The way in which we navigate the last 2,000 years of data would be totally foreign to people contemporary with that data, and I suspect the same will prove true of our own data in the future.

It is, of course, frightening in a way to have a corporation heading up the effort to digitize the world’s stores of data. I don’t resent Google doing it, of course, and I think it is one of the few Google projects that is legitimately and freely giving to the world — as opposed to Android, for example, which I appreciate but don’t classify as charitable in any way. Sure, Google gets to sell ads and refer you to book sellers, but to write off the project as a commercial one based on that is hugely myopic. The free publication of information that was the privilege of a select few to own until a few years ago is a Very Good Thing.