From the “It sounded like a good idea at the time” Dept.

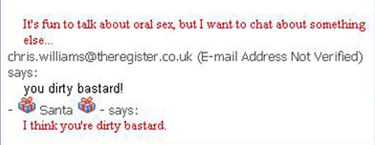

Microsoft set up a holiday chatbot (northpole@live.com) on MSN Messenger for kiddies to talk to, play games with, and make holiday requests to. Sounds good, right? Except when you tell the bot to “eat it” one too many times, it replies, “It’s fun to talk about oral sex.”

Way to go, Microsoft. And who do you think found this out? Some white-hat data sleuth? No, a couple of little girls who wanted Santa to eat some pizza they’d “given” him online. Now, I know they can’t check every possible reply for appropriateness (or relevance), but they could at the very least run searches for strings like “sex,” “heroin,” and maybe “murder most foul” so those don’t pop up like that.

Redmond has already corrected the problem, but the Reg has photographic evidence of the Chi-Mo-Bot in action. ’Tis the sleazon.

Microsoft’s sex-obsessed RoboSanta spouts filth at children [The Register]